- jaro education

- 8, January 2024

- 1:08 pm

Data engineering is a robust, rapidly evolving field with plenty of unexplored possibilities. According to reports by TOI that cite LinkedIn’s Emerging Jobs Report, the data science market is forecast to grow from $37.9 billion in 2019 to $230.80 billion by 2026. This trajectory highlights the field’s significance and the growing demand for skilled professionals in the domain.

Data engineering is a compelling destination for aspiring IT enthusiasts seeking a resilient and enduring career path. However, venturing into a new discipline, especially one as intricate as data engineering, can be a daunting task. The key to overcoming this challenge lies in the formulation and execution of a well-crafted educational plan—a roadmap, to be specific.

This article serves as a comprehensive guide to crafting a data engineer roadmap for 2024. We will look at what such a roadmap involves, covering its components, pivotal milestones, strategies for tracking progress, and additional resources for navigating the intricate terrain of data engineering.

What is Data Engineering

Data engineering is a critical field in the IT sector, focusing on preparing data for analytical or operational uses. Data engineers are primarily responsible for building data pipelines to integrate, consolidate, cleanse, and structure data from various sources for use in analytics applications. They work to make data easily accessible and optimize their organization’s big data ecosystem. Their work is crucial in enabling businesses to make more trustworthy decisions through improved data transparency.

Data engineers work alongside data scientists, providing them with usable data formats for predictive analytics, machine learning, and data mining applications. They deal with both structured and unstructured data, which requires an understanding of various data architectures and big data technologies. The next section traces how data engineering has evolved alongside database technology.

Evolution of Data Engineering

The evolution of data engineering has been a journey marked by significant shifts in database technologies and methodologies. Initially, data management was manual and error-prone, relying heavily on paper-based systems. The introduction of hierarchical and network databases marked early attempts to structure data, but they had limitations in representing complex relationships and accommodating evolving data structures. Here are some crucial milestones of the data engineering evolution journey:

Rise of Relational Databases

The development of relational databases was a major shift. These databases used tables to structure data and were easier to manipulate and retrieve data from. They supported complex queries and established relationships between entities, forming the foundation for modern data engineering practices.
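The idea of tables linked by relationships can be sketched with Python's built-in `sqlite3` module. The table names, column names, and sample rows here are assumptions made up for illustration; they do not come from any particular system.

```python
import sqlite3

# In-memory database; all table and column names are illustrative only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (
        id   INTEGER PRIMARY KEY,
        name TEXT NOT NULL
    );
    CREATE TABLE orders (
        id          INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        amount      REAL NOT NULL
    );
    INSERT INTO customers (id, name) VALUES (1, 'Asha'), (2, 'Ravi');
    INSERT INTO orders (customer_id, amount)
        VALUES (1, 250.0), (1, 100.0), (2, 75.5);
""")

# The join follows the relationship between the two tables,
# and the aggregate summarises each customer's orders.
rows = conn.execute("""
    SELECT c.name, COUNT(o.id) AS order_count, SUM(o.amount) AS total
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.id, c.name
    ORDER BY c.id
""").fetchall()

for name, order_count, total in rows:
    print(name, order_count, total)
```

The query never says how to find the matching rows; it only declares the relationship, and the database engine works out the retrieval.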

Limitations of Relational Databases

Despite their advancements, relational databases faced challenges with scalability, particularly with the vertical scaling model. They struggled with complex data structures and evolving business needs and faced an impedance mismatch with object-oriented programming.

The Advent of NoSQL Databases

As datasets grew and diversified, the limitations of relational databases led to the emergence of NoSQL databases. These offered a more flexible and scalable alternative, accommodating various data types and structures, including document-oriented, key-value, column-family, and graph databases.

Polyglot Persistence and Multi-Model Databases

With increasing diversity and complexity in data engineering needs, polyglot persistence has become crucial. It involves using multiple database technologies tailored to specific data models within an application ecosystem. Multi-model databases emerged, supporting various data models within a unified framework and offering a more versatile solution.

NewSQL Databases

Representing a middle ground between traditional relational databases and modern distributed systems, NewSQL databases addressed the limitations of traditional RDBMS while maintaining data integrity and supporting large-scale, concurrent user demands.

Role and Responsibilities of Data Engineers

Data engineers are pivotal in managing and preparing data for utilization by data scientists and analysts. Their roles can be classified into three distinct categories:

Generalist Data Engineers

These professionals are often part of smaller teams, handling comprehensive data management tasks from collection to processing. Typically possessing a broad skill set, they may have less expertise in systems architecture compared to other data engineers. For a data scientist transitioning to a data engineering role, the generalist position is a suitable starting point.

An example of their work could include developing a dashboard for a local food delivery service that tracks daily delivery numbers and predicts future delivery volumes.

Pipeline-Centric Data Engineers

Operating primarily within midsize analytics teams, these engineers tackle more intricate projects, often spanning across distributed systems. This role is common in medium to large-sized organizations. For instance, in a regional food delivery company, a pipeline-centric data engineer might develop a tool to assist data scientists and analysts in retrieving delivery-related metadata. This tool could analyze variables such as delivery distances and times to aid in predictive analysis for future business planning.

Database-Centric Data Engineers

These engineers focus on the implementation, maintenance, and updating of analytics databases, a role typically found in larger organizations where data is spread across multiple databases. Their responsibilities include managing data pipelines, optimizing databases for analysis, and developing table schemas through ETL (Extract, Transform, Load) processes.

Responsibilities of Data Engineers

Data engineers collaborate with data science teams, playing a pivotal role in refining and presenting data for analytical purposes. They transform raw data into formats that data scientists can utilize for executing queries and algorithms. This process is essential for activities such as predictive analytics, machine learning, and data mining. Moreover, data engineers consolidate data for various stakeholders, including business leaders and analysts, facilitating their ability to conduct analyses and leverage these insights for optimizing business operations.

In their role, data engineers handle both structured and unstructured data types. Structured data refers to information that can be systematically organized in a database or similar structured format. Conversely, unstructured data, which includes formats like text, images, and audio-video files, does not fit into traditional database models. To manage these diverse data types, data engineers need a comprehensive understanding of various data architectures and applications. They also utilize an array of big data tools, including open-source frameworks for data ingestion and processing, to manage and utilize data effectively.

Skill Set Required to Excel as a Data Engineer

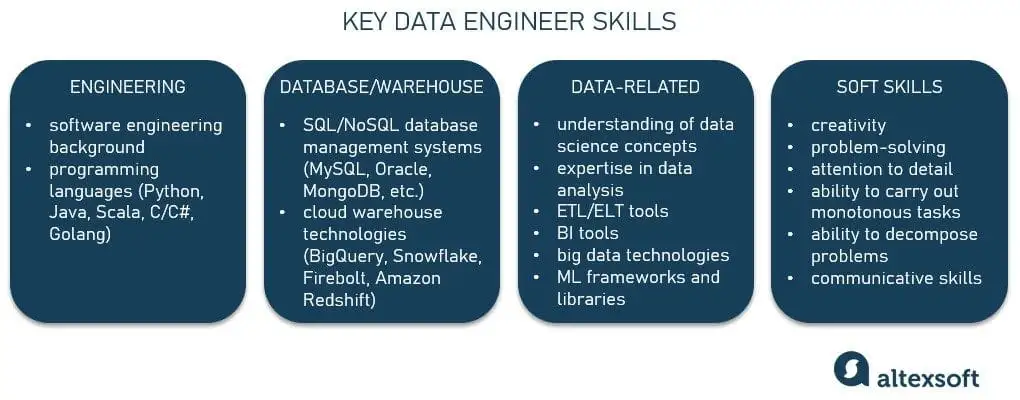

Data engineering is a booming field, but it is not as easy as it may seem; excelling in it takes a lot of learning and practice. Here are the skills to build if you want to become a data engineer:

*altexsoft.com

SQL

Mastery of SQL is fundamental for managing relational database management systems (RDBMS). This involves not just memorizing queries but understanding how to issue optimized ones.
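One way to see what "optimized" can mean in practice is to compare a query plan before and after adding an index. The sketch below uses Python's built-in `sqlite3` module; the `deliveries` table, its columns, and the generated rows are hypothetical, and other database engines expose query plans through different commands.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE deliveries (id INTEGER PRIMARY KEY, city TEXT, minutes INTEGER)"
)
# Synthetic rows, generated only so the planner has something to work with.
conn.executemany(
    "INSERT INTO deliveries (city, minutes) VALUES (?, ?)",
    [("Mumbai" if i % 2 else "Pune", 20 + i % 15) for i in range(1000)],
)

QUERY = "SELECT AVG(minutes) FROM deliveries WHERE city = ?"

def plan(sql, params):
    """Return SQLite's textual query plan for a statement."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # The last column of each plan row holds the human-readable detail.
    return " | ".join(row[-1] for row in rows)

plan_before = plan(QUERY, ("Pune",))  # no index yet: a full table scan
conn.execute("CREATE INDEX idx_deliveries_city ON deliveries (city)")
plan_after = plan(QUERY, ("Pune",))   # the planner can now use the index

print("before:", plan_before)
print("after: ", plan_after)
```

The exact wording of the plan text varies between SQLite versions, but the first plan should describe a scan of the table and the second a search using `idx_deliveries_city`.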

Data Warehousing

Proficiency in building and utilizing data warehouses is crucial. Data warehousing helps aggregate data from various sources into one place for improved business operation efficiency.

Data Architecture

Data engineers need expertise in constructing complex database systems and managing data in motion and at rest, as well as its interrelations with dependent processes and applications.

Coding

Proficiency in programming is essential for integrating databases with various applications (web, mobile, desktop, IoT). Key languages include Java or C# for different tech stacks and Python and R for diverse data-related operations.

Operating Systems

Familiarity with operating systems such as UNIX, Linux, Solaris, and Windows is necessary.

Apache Hadoop-Based Analytics

Knowledge of Apache Hadoop is important for the distributed processing and storage of datasets. Skills in Hadoop, HBase, and MapReduce are beneficial for a range of data operations.
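The MapReduce model mentioned here can be illustrated without a cluster. The following is a single-process sketch in plain Python, not Hadoop code: the function names and sample documents are assumptions, and a real job would distribute the map and reduce phases across many machines.

```python
from collections import defaultdict

def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]

def shuffle(pairs):
    """Shuffle: group all emitted values by their key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(groups):
    """Reduce: combine the values for each key into a single count."""
    return {key: sum(values) for key, values in groups.items()}

documents = ["big data big pipelines", "data pipelines move data"]
pairs = [pair for doc in documents for pair in map_phase(doc)]
counts = reduce_phase(shuffle(pairs))
print(counts)
```

Because each document is mapped independently and each key is reduced independently, both phases can run in parallel, which is what makes the model suitable for distributed processing.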

Machine Learning

While closely associated with data science, understanding machine learning for statistical analysis and data modeling is advantageous for data engineers.

Tools for Data Engineers

To excel as a data engineer, you need a working knowledge of tools that cover various aspects of data management, processing, and analysis. Here are the key tools:

Database Tools

Proficiency in SQL and NoSQL databases is crucial for managing large volumes of structured, semi-structured, and unstructured data. Familiarity with database design and architecture and tools like MySQL, PL/SQL, Cassandra, and MongoDB is essential.

Data Transformation Tools

Data engineers must be skilled in transforming raw data into a consumable format. This involves using tools such as Hevo Data, Matillion, Talend, Pentaho Data Integration, and InfoSphere DataStage.

Data Ingestion Tools

Understanding and utilizing data ingestion tools and APIs, such as Apache Kafka, Apache Storm, Apache Flume, Apache Sqoop, and Wavefront, is vital for moving data effectively from various sources to a central system for analysis.

Data Mining Tools

Data mining is crucial for extracting information, finding patterns, and preparing large data sets for analysis. Tools like Apache Mahout, KNIME, RapidMiner, and Weka are important in this aspect.

Data Warehousing and ETL Tools

Knowledge of data warehousing and ETL (Extract, Transform, Load) processes is necessary. ETL tools such as Talend, Informatica PowerCenter, AWS Glue, and Stitch are commonly used for this purpose.
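The three ETL stages can be sketched in a few lines of standard-library Python. This is a minimal illustration under stated assumptions: the CSV content, the `orders` table, and the cleaning rules are invented for the example, and dedicated ETL tools add scheduling, monitoring, and connectors that are not shown.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; in practice this would come from a file or an API.
RAW_CSV = """order_id,city,amount
1, Mumbai ,250.0
2,pune,
3,Mumbai,100.5
"""

def extract(text):
    """Extract: parse CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop rows without an amount, tidy city names, cast types."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # skip incomplete records
        cleaned.append(
            (int(row["order_id"]), row["city"].strip().title(), float(amount))
        )
    return cleaned

def load(rows, conn):
    """Load: write the cleaned rows into a target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER, city TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
totals = conn.execute(
    "SELECT city, SUM(amount) FROM orders GROUP BY city ORDER BY city"
).fetchall()
print(totals)
```

Keeping the three stages as separate functions makes each one easy to test and replace on its own.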

Real-time Processing Frameworks

Skills in real-time data processing frameworks like Apache Spark, Hadoop, Apache Storm, and Flink are essential for generating quick insights from real-time data.

Data Buffering Tools

Data buffering is crucial for managing streaming data from multiple sources. Tools such as Kinesis, Redis Cache, and GCP Pub/Sub are commonly used.
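The buffering idea itself is simple: hold incoming records briefly and release them downstream in batches. The class below is a hypothetical in-memory sketch of that idea only. It does not reflect how Kinesis, Redis, or Pub/Sub work internally, and the `flushed` list merely stands in for a real downstream sink.

```python
from collections import deque

class BatchBuffer:
    """Collects incoming records and releases them in fixed-size batches."""

    def __init__(self, batch_size):
        self.batch_size = batch_size
        self._items = deque()
        self.flushed = []  # stand-in for a downstream sink

    def put(self, record):
        """Accept one record and flush automatically when the batch is full."""
        self._items.append(record)
        if len(self._items) >= self.batch_size:
            self.flush()

    def flush(self):
        """Hand over whatever is currently buffered as one batch."""
        if self._items:
            self.flushed.append(list(self._items))
            self._items.clear()

buffer = BatchBuffer(batch_size=3)
for event in range(7):
    buffer.put(event)
buffer.flush()  # release the final partial batch
print(buffer.flushed)
```

Batching smooths out bursts from fast producers so that slower consumers receive data at a manageable rate.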

Machine Learning Tools

Integrating machine learning into big data processing requires a strong foundation in mathematics and statistics and knowledge of tools like SAS, SPSS, R, etc.

Cloud Computing Tools

Setting up and managing cloud infrastructure is a key skill. Familiarity with AWS, Azure, GCP, OpenStack, OpenShift, and similar platforms is necessary.

Tools for Data Visualization

The ability to present data insights in a consumable format is important. Visualization tools like Tableau, Qlik, Tibco Spotfire, and Plotly are widely used.

How to Become a Data Engineer?

Data engineering has no straight or single path to follow. Becoming a data engineer is a combination of education, technical and soft skill sets, knowledge of tools, and experience. However, here are certain parameters you must keep in mind to become a data engineer:

Education

Obtain a bachelor’s degree in computer science, software engineering, or a related field. Mathematics or statistics degrees are also beneficial. Consider a master’s degree in data science for advanced knowledge.

Technical Skills

Develop proficiency in Python, Java, and SQL programming languages. Acquire expertise in big data technologies such as Hadoop, Spark, and Kafka, and familiarize yourself with cloud platforms like AWS, Azure, or Google Cloud Platform.

Portfolio Development

Gain practical experience by engaging in open-source projects, hackathons, and coding competitions. This helps in building a portfolio to demonstrate your skills and experiences.

Database Management Knowledge

Learn about database management, data modeling, and data warehousing, focusing on both SQL and NoSQL database systems.

Continuous Learning

Stay updated with evolving trends and technologies in data engineering. Participate in conferences, webinars, and workshops to learn new skills and network with peers.

Certification for Data Engineering

Here is a list of top data architect and data engineer certifications in 2023, each with a brief description:

IIT Delhi provides the Executive Programme in Applied Data Science using Machine Learning and Artificial Intelligence With Jaro Education. This program empowers executives and professionals with essential skills, offering a thorough grasp of data science principles, machine learning algorithms, and AI techniques. Participants gain practical insights to apply these skills effectively in real-world situations.

Enhance your data science skills with IIM Kozhikode’s Professional Certificate in Data Science for Business Decisions. Tailored for tech professionals and managers at all levels, the program provides comprehensive learning in supervised and unsupervised learning, social media analytics, big data technologies, with a focus on practical applications in business and management, rather than programming.

This program is tailored for mid-management to CXO-level executives, focusing on cyber security knowledge for systematic risk assessment and management. Emphasizing versatility over tool reliance, it also develops communication, leadership, strategy, team management, governance, and core concepts for a comprehensive understanding of cybersecurity in a rapidly evolving landscape.

The Online Master of Science (Data Science) offered by Symbiosis School for Online and Digital Learning (SSODL) offers a comprehensive framework for understanding the data science life cycle, statistical foundations, technologies, and applications.

Data Engineer: Career Path

A typical data engineering career path looks like this, though the exact progression depends on experience, education, and technical skills:

Entry-Level Data Engineer

Starts with a computer science degree, gaining initial experience in programming, databases, and big data technologies, working under experienced engineers.

Junior Data Engineer

Progresses with experience in data engineering, enhancing skills in programming and big data technologies and handling complex projects with greater responsibility.

Senior Data Engineer

Brings years of experience with multiple programming languages and big data technologies, leading projects and teams, and designing sophisticated data solutions.

Lead Data Engineer

Demonstrates extensive data engineering experience and leadership, overseeing teams and responsible for enterprise-wide data solutions.

Data Architect

Focuses on designing and implementing data architectures aligned with business goals, collaborating with stakeholders and engineers to ensure scalable, reliable, and secure solutions.

Data Infrastructure Manager

Manages the organization’s data infrastructure, including databases and big data technologies, coordinating with IT departments for integrated solutions.

Chief Data Officer

A top executive role, overseeing data strategy and its effective use to meet business objectives, leading data engineering, analytics, and governance, and collaborating with other executives.

Salary of Data Engineers in India

The salary range for Data Engineers in India spans from ₹3.1 Lakhs to ₹20.0 Lakhs, with an average annual salary of ₹9.8 Lakhs. These salary figures are derived from a comprehensive analysis of the latest compensation data, encompassing 27.6 thousand reported salaries from professionals working in the field of Data Engineering. This statistical representation provides a robust overview of the remuneration landscape for Data Engineers in the Indian job market, illustrating the diversity in earnings and establishing a benchmark for prospective professionals in the industry.

*ambitionbox.com

Emerging Technology in Data Engineering

Data engineering is continuously evolving, and new technologies keep emerging. Here are some technologies that every data engineer should know to stay ahead of the curve. Each has unique features and benefits that can help you make better data-driven decisions.

Apache Superset

Created at Airbnb and brought into the Apache Software Foundation’s incubator in 2017, Apache Superset is an open-source platform for data visualization and exploration. It enables businesses to analyze and visualize data from various sources. Known for its scalability, Apache Superset handles large data volumes without compromising performance, making it a widely adopted tool in the business and organizational sectors.

Apache Iceberg

Developed at Netflix and released to the public in 2018, Apache Iceberg is an open-source table format for large analytic datasets. It supports diverse workloads, including batch and interactive processing, machine learning, and ad-hoc queries. Its design focuses on modern, scalable, and efficient management of large datasets.

Great Expectations

This open-source Python library, first introduced in October 2019, offers a suite of tools for testing and validating data pipelines. Great Expectations allows users to set “expectations” for their data, which are essentially rules or constraints that the data flowing through a pipeline must satisfy. These can range from basic checks for missing values to complex constraints like specific column correlations. Great Expectations also provides tools for visualizing and documenting data pipelines, simplifying the understanding and troubleshooting of intricate data workflows.
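To make the notion of an expectation concrete, here is a plain-Python sketch of two such rules. It deliberately does not use the Great Expectations API; the function names, result fields, and sample rows are all assumptions chosen for illustration.

```python
def expect_no_missing(rows, column):
    """Expectation: every row has a non-empty value in `column`."""
    failed = [i for i, row in enumerate(rows) if row.get(column) in (None, "")]
    return {
        "check": f"{column} has no missing values",
        "success": not failed,
        "failed_rows": failed,
    }

def expect_between(rows, column, low, high):
    """Expectation: present values in `column` lie within [low, high]."""
    failed = [
        i
        for i, row in enumerate(rows)
        if row.get(column) is not None and not (low <= row[column] <= high)
    ]
    return {
        "check": f"{column} is between {low} and {high}",
        "success": not failed,
        "failed_rows": failed,
    }

# Hypothetical delivery records with one missing and one implausible value.
rows = [
    {"order_id": 1, "minutes": 25},
    {"order_id": 2, "minutes": None},
    {"order_id": 3, "minutes": 400},
]

results = [
    expect_no_missing(rows, "minutes"),
    expect_between(rows, "minutes", 0, 180),
]
for result in results:
    print(result)
```

Each check returns a structured result instead of raising an error, so a pipeline can collect every failure and report them together.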

Delta Lake

Open-sourced by Databricks in 2019, Delta Lake is a storage layer that enhances the reliability, scalability, and performance of data lakes. It has gained rapid popularity in the data community and brings reliability to data lakes built on Apache Spark by adding a transactional layer. This layer guarantees atomic and consistent data updates, positioning Delta Lake as a critical tool for effective data lake management and maintenance.

ChatGPT

Introduced by OpenAI in November 2022, ChatGPT is a conversational large language model initially built on the GPT-3.5 series. Its primary function is to produce responses that closely resemble human conversation based on natural language inputs. The model can comprehend and respond in various languages, and it can be tailored to particular domains or tasks to enhance its effectiveness. For data engineers, ChatGPT offers valuable capabilities like text categorization, sentiment analysis, and language translation, aiding in extracting insights from unstructured data.

Future Trends and Predictions in Data Engineering: 2024

1. Expanding Cloud Adoption

Companies are increasingly moving their IT infrastructure and data to cloud platforms like Amazon Web Services (AWS) and Microsoft Azure, indicating a surge in demand for cloud-based data engineering.

2. Increased Investment in Financial Operations (FinOps)

There’s a growing focus on optimizing cloud costs, with financial experts playing a key role in ensuring cost-effective data engineering strategies.

3. Segmentation Based on Data Usage

A shift from unified data warehouses to more versatile data lakes is occurring, allowing for more efficient and performance-oriented data management.

4. Specialized Data Teams

Data teams are evolving to include a broader range of specialists, such as data architects and data product managers, to manage various aspects of data architecture.

5. Implementation of Data Mesh

This innovative architectural approach decentralizes data management, aiming for more efficient and autonomous data handling.

6. Bidirectional Cloud-Premises Data Architecture

Modern data engineering is moving towards a two-way data flow system, enhancing synchronization across applications.

7. Rise of Data Contracts

Data contracts, functioning similarly to service level agreements, are becoming more common to ensure quality and accuracy in data handling.
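In its simplest form, a data contract is an agreed schema that a producer's records are checked against before consumers rely on them. The sketch below assumes a hypothetical contract of three typed fields; real contracts usually also cover semantics, freshness, and ownership, which are not shown.

```python
# Hypothetical contract: field name -> required Python type.
CONTRACT = {
    "order_id": int,
    "city": str,
    "amount": float,
}

def violations(record, contract=CONTRACT):
    """Return a list of human-readable contract violations for one record."""
    problems = []
    for field, expected_type in contract.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            actual = type(record[field]).__name__
            problems.append(
                f"{field}: expected {expected_type.__name__}, got {actual}"
            )
    return problems

good = {"order_id": 1, "city": "Pune", "amount": 99.0}
bad = {"order_id": "1", "city": "Pune"}

print(violations(good))
print(violations(bad))
```

Running a check like this at the boundary between teams means a breaking change is caught where it is produced, not in a downstream dashboard.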

8. Quicker Resolution of Data Engineering Issues

Leveraging AI and ML for faster identification and resolution of data anomalies is a key trend, leading to more accurate insights.
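As a much simpler stand-in for the AI and ML approaches described here, a basic statistical check already shows the principle of flagging anomalies automatically. The sketch below uses a z-score rule with an assumed threshold of 2.0, applied to made-up daily row counts; production systems typically use more robust methods.

```python
from statistics import mean, stdev

def zscore_outliers(values, threshold=2.0):
    """Return indexes of values more than `threshold` standard deviations from the mean."""
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # all values identical, nothing to flag
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]

# Hypothetical number of rows loaded per day; the last load looks broken.
daily_rows = [1000, 1020, 990, 1010, 1005, 995, 1015, 985, 1000, 15]
outliers = zscore_outliers(daily_rows)
print(outliers)
```

A check like this can run after every load and raise an alert when a batch looks unusual, long before anyone inspects the data by hand.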

9. Demand for User-Friendly Development Platforms

Low-code and no-code platforms are becoming popular, catering to non-technical professionals and facilitating easier application development.

Final Thoughts

Behind every exciting glimpse into the future, whether near or far, there is a data engineer helping to make it happen. Take self-driving cars as an example: they are no longer so rare, and they may soon become a usual way to get around, in part because data engineers are working hard to improve the technology. As ideas like these become more common, the need for data engineers, the people who know how to handle all that data, is going to grow even further.