- jaro education

- 7, March 2024

- 10:00 am

Predictive analytics refers to various statistical and data mining techniques that analyze current and historical data to make informed predictions about future outcomes. Leveraging both structured and unstructured big data predictive analytics drives competitive advantage across functions like marketing, sales, operations, and finance. It powers data-driven decision-making by uncovering patterns and customer insights that would be non-intuitive otherwise.

While descriptive analytics focuses on reporting what has already happened, predictive analytics forecasts what could happen in the future. Its techniques include predictive modeling, machine learning, statistical modeling, data mining, forecasting, and optimization, which help organizations prepare better for multiple scenarios.

This blog provides a deep insight into popular data science methods and best practices for developing accurate and robust predictive analytics models.

Overview of the Predictive Modeling Process

By utilizing data science techniques like statistical modeling, machine learning, and artificial intelligence, predictive analytics can predict future changes from historical information before they become obvious and offer a significant competitive advantage. Even though there is uncertainty in outcomes, constantly improving methods and tracking their success allows to tackle the problems caused by the development of new ideas.

Table of Contents

The typical cross-functional predictive modeling process consists of:

Defining Objectives

Frame the business problem by identifying key performance metrics and success criteria.

Data Collection

Gather, clean, and combine relevant structured and unstructured training data from disparate sources, including sensors, business applications, social media feeds, and other external sources.

Exploratory Analysis

Visually explore and analyze the training data to gain preliminary insights on trends, variables, dependencies, and relationships. Techniques like correlation analysis are used in this step.

Feature Engineering

Derive aggregated or new variables by transforming raw data for better predictive performance. While going through this step, domain knowledge helps create meaningful features.

Model Development

Build and train predictive models testing multiple algorithms like neural networks, regression, decision trees, etc., based on problem complexity. A combination of models may be used.

Model Evaluation

Thoroughly test models on hold-out evaluation data sets and select the best-performing model based on metrics like accuracy, AUC, precision, recall, etc.

Operationalization and Monitoring

Deploy the selected model and continuously monitor its performance to detect deterioration over time.

Thus, multiple data science methods and predictive modeling techniques power each of these stages, providing business insights from data patterns that are otherwise non-intuitive.

Key Data Science Methods for Predictive Analysis

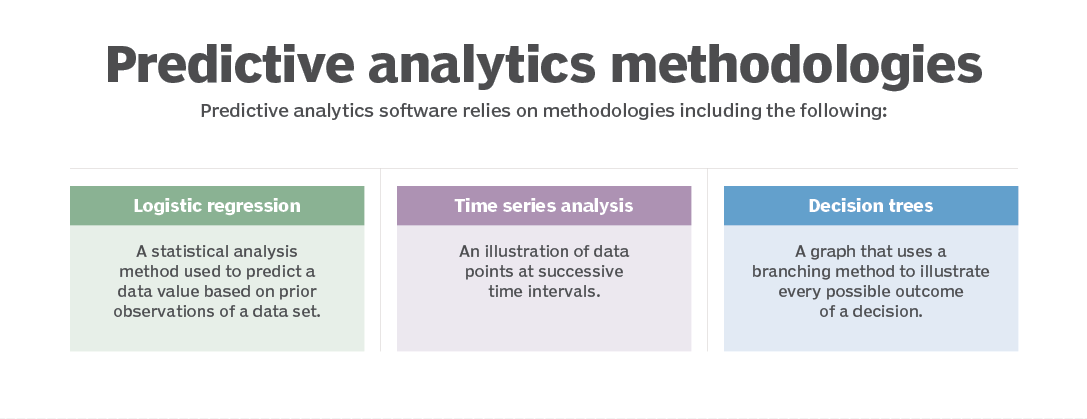

Predictive modeling has its foundation in machine learning and statistical methods. The various techniques differ based on model interpretability, performance metrics optimized for, training data, and problem complexity. Key predictive analytics models include

Linear and Logistic Regression

Regression analysis estimates relationships between dependent (target/outcome) and independent (predictor) variables in data based on correlation and trends. Linear regression fits a straight-line model and predicts a numeric target value. It optimizes to minimize root mean square error between actual and predicted values. Logistic regression predicts categorical or discrete binary target variables like pass/fail, fraud/no-fraud, etc, optimizing log loss. It is interpretable, fast, and avoids overfitting.

On the other hand, Regression models forecast demand, set optimal prices, credit risk analysis, etc., but assume a linear relationship that limits accuracy on complex real-world problems.

Time Series Forecasting

Time series data captures values over sequential time intervals, allowing models to analyze trends and seasonal patterns. Exponential smoothing averages time-weighted historical data applying decay factors. ARIMA models forecast future values from lagged values and past errors using autoregressive terms. RNN deep learning models also power highly accurate time-series forecasts.

Common applications of time series forecasting include – economic forecasting, inventory planning, and energy consumption predictions over weeks, months, and years, leveraging historical periodicity.

Decision Trees

Decision trees split training data recursively based on value thresholds in features following branching logic that mimics human decision-making. Random forests improve accuracy by combining multiple decor-related decision trees, avoiding overfitting. While decision trees are interpretable and non-parametric, they are prone to inaccuracy when dealing with noisy data

Decision rules learned to classify customers, predict equipment failures and estimate insurance risk. Tree depth, pruning, and regularisation prevent overfitting. Differential privacy techniques protect sensitive splitting attribute data.

K-Means Clustering

An unsupervised machine learning technique, k-means clustering finds inherent groups of similar data points in a dataset, assigning them into k clusters. The highly scalable algorithm is versatile, fast, and easier to interpret, but cluster quality depends significantly on initial random cluster center allocation. K-means clustering powers customer segmentation, image compression, document classification, and anomaly detection applications.

Neural Networks

Inspired by biological neuron interconnections, artificial neural networks contain an input layer that feeds into multiple computation hidden layers culminating in an output layer. Weights between neurons are trained based on error through techniques like backpropagation. Deep neural networks find complex nonlinear relationships suited for vision, speech, and language problems.

However, the inner workings of deep neural networks are opaque. Strict rules around bias, security, and ethics are key for business use. Combining neural networks with more understandable methods can balance accuracy and interpretability.

*techtarget.com

Best Practices for Predictive Modeling

While the future is inherently uncertain, continuously enhancing data science methods and testing models against changing real-world conditions improves predictive analytics performance over time. Some key best practices include:

Define Key Business Objectives

As the popular adage says – “If you don’t know where you are going, any model will take you there”. Defining key business metrics and objectives that predictive insights aim to improve ensures tight alignment. Subject matter experts provide the necessary framing and constraints to shape analytics.

Address Data Challenges

As highlighted in an HBR study, poor data quality is the biggest obstacle to effective analytics. Tackling biases, missing values, errors, outliers, concept drift, and integration of unstructured data requires a combination of statistical techniques and data engineering pipelines for trustworthy analytics.

Combine Multiple Techniques

Each family of predictive modeling techniques has relative strengths and limitations. Blending supervised, unsupervised, regression-based, tree-based, clustering, and deep learning algorithms tailors optimally to the problem complexity, interpretability needs, and accuracy-latency tradeoffs.

Focus on Continuous Deployment

The biggest ROI on analytics investments comes from consistently monitoring model performance post-deployment and driving decisions and actions from predictions. Monitoring model metrics over time allows for detecting deterioration due to changing conditions and proactively triggers model rebuilds.

Experiment Constantly

Predictive analytics fundamentally relies on testing empirical techniques on samples first before full adoption. An agile, metrics-driven experimental approach to trying new data sources, features, and algorithms provides the adaptive capacity to maximize value.

Plan for Responsible AI Usage

As predictive models increasingly drive semi-automated or automated decisions using AI across business and society, governance frameworks addressing bias, fairness, transparency, and ethics become vital considerations even with highly accurate models.

Use Cases and Applications of Predictive Analysis

Industry applications using predictive analytics span across verticals delivering immense value:

Banking

Credibility analysis and loan default prediction minimize risk exposure by forecasting borrower repayment capacities using financial transaction history. Clustering algorithms perform customer segmentation for personalized and targeted product recommendations.

Insurance

Predictive actuarial models more accurately estimate policyholder risk levels and future claim liabilities using regression techniques. Premiums are adjusted accordingly, and capital reserves are optimized.

Healthcare

Patient diagnosis, hospital readmission, and disease progression risks are forecast to improve outcomes. Models prescribe personalized treatments optimizing for efficacy. AI assistance also minimizes human diagnostic errors.

Retail

Demand forecasting, inventory optimization, product suggestion, dynamic pricing, and customer lifetime value models all contribute to improved supply chain efficiency and marketing effectiveness. They also help to increase sales conversions by using predictive consumer data.

HR

Predictive workforce analytics guides hiring decisions and forecasts employee turnover risks. With that, it identifies cross-selling opportunities and provides inputs to recommend training interventions, minimizing attrition.

Manufacturing

Predictive maintenance models are scheduled using IoT sensor data to minimize equipment downtime via parts replacement just in time. This helps avoid unnecessary interventions or failures. Production and quality control processes are optimized.

The applications span operational process efficiencies, informed decision-making, and differentiated customer experiences – all catalyzed by unlocking actionable insights from data.

Challenges in Predictive Modeling

While predictive modeling promises great benefits, developing and deploying high-quality models consistently faces some key challenges:

Data Quality and Complexity

Real-world data tends to be disorganized, incomplete, and error-prone. Data collection systems and sources change over time, affecting data quality. Complex unstructured data also provides modeling challenges. Garbage In Garbage Out (GIGO) holds for predictive models. If the input data is flawed, incomplete, or filled with errors (garbage in), then the outputs and predictions of the model will also be flawed and inaccurate (garbage out). High-quality input data is crucial for building effective machine-learning models that generate valid and useful predictions.

Overfitting Models

A model tuned too closely to training data will not perform well when confronted with new data. Overfitting leads to poor real-world performance. You can avoid overfitting by opting for rigorous testing, regularisation techniques, and boosting methods.

Concept Drift

Predictive relationships learned from historical data change over time. External factors cause concept drift, requiring models to be dynamically updated. So, model performance must be continuously monitored to detect concept drift.

Interpretability vs Accuracy

Interpretability is important for business adoption. However, some black-box algorithms like neural networks and deep learning are highly accurate while being un-interpretable. Tradeoffs are often required between model interpretability and predictive accuracy.

Latency and Performance

For time-critical decisions based on real-time data, model prediction latency is important and affects adoption. Simpler models may sacrifice accuracy for lower latency. Optimization, approximation algorithms, and hardware upgrades improve performance.

Conclusion

An effective predictive analytics approach requires testing different techniques to find the right balance of accuracy and interpretability for the problem. Useful techniques to test include regression analysis, time series forecasting, decision trees, clustering algorithms, and neural networks. The models should focus on predicting key business metrics that will provide the most value. By continuously reviewing and refining their models and techniques, organizations can use new data to gain ongoing insights that inform better strategic decisions, which will allow them to improve over time and maintain a competitive edge.

Gaining vital data science skills requires proper training and education. And for that, you must enroll in the Executive Programme in Data Science using Machine Learning & Artificial Intelligence, offered by IIT Delhi. This course equips the participants to leverage AI and machine learning to extract insights from data. Through real-world projects and hands-on learning, you can gain practical skills to drive innovation and growth. Upskill now, to become a leader in AI and analytics.