- jaro education

- 12 April 2024

- 6:36 pm

In data analytics, text analytics is an increasingly important tool that helps businesses gain insights from unstructured data. Although vast amounts of unstructured data are generated every day, this kind of data has traditionally been difficult to analyse. With big data analytics, however, businesses can extract valuable insights from it to inform their decisions and improve their operations.

What is Text Analytics?

Text Analytics combines a set of machine learning and deep learning models. These models apply linguistic and statistical techniques to large volumes of unstructured text (text without a predefined format) to extract patterns and insights. Businesses, government authorities, IT researchers, and media houses use it to draw on relevant content for decision-making. Text Analytics techniques include topic modelling, sentiment analysis, term frequency analysis, named entity recognition, and event extraction.

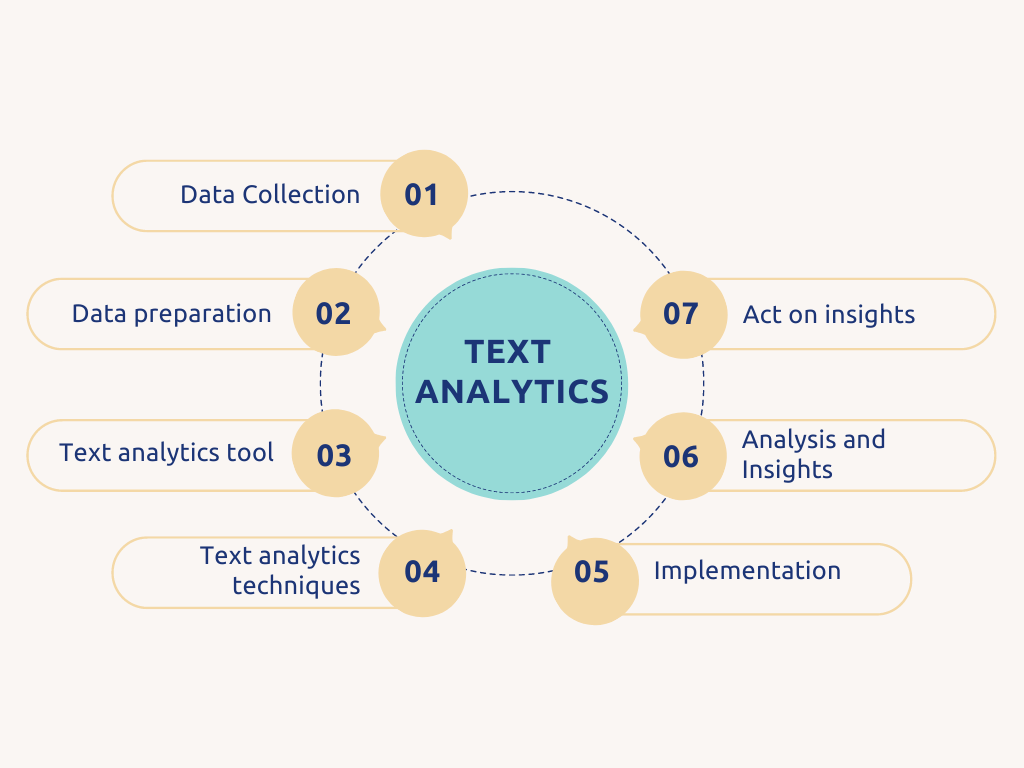

What are the Steps Involved in Text Analytics?

Text Analytics is a multi-step technique: unstructured content must first be collected and cleaned before it can be analysed. Practitioners use different methods, but a typical workflow looks like this:

1. Data Collection

Text data comes from many sources. Internal sources, scattered across an organisation’s databases, include emails, client chats, product reviews, customer tickets, and Net Promoter Score surveys. Organisations also draw external data from blog posts, social media posts, news, reviews, and web forum discussions. A company’s internal data is readily available, whereas external data has to be gathered.


Source: surveypal.com

2. Data Preparation

Once the unstructured data has been collected, it must go through several preparatory steps before machine learning algorithms and deep learning models can analyse it. Most Text Analytics software performs these steps automatically. Text preparation relies on several natural language processing (NLP) techniques:

- Tokenization: The text analysis algorithm breaks the continuous string of text into tokens, the smaller units (words, subwords, or characters) that make up phrases and sentences. For example, the word ‘fish’ can be split into character tokens (F-I-S-H), and ‘fishing’ into subword tokens (Fish-ing). Tokens are the basic units of all natural language processing. This step also discards unwanted content such as whitespace.

- Part-of-Speech Tagging: Each token is labelled with a grammatical category, such as verb, noun, adjective, or adverb.

- Parsing: Parsing determines the syntactic structure of the text. Two popular techniques are constituency parsing and dependency parsing.

- Stemming and Lemmatization: Both procedures reduce tokens to a base form. Stemming strips prefixes and suffixes from a token, so the result is not always a real word, whereas lemmatization returns the token’s lemma, or dictionary form.

- Stopword Removal: This data-cleansing step removes tokens that occur frequently but carry little value for Text Analytics, such as ‘a’, ‘and’, and ‘the’.
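The preparation steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions: the stopword list, suffix list, and lemma table are small hypothetical stand-ins, and `naive_stem` is a toy suffix stripper rather than a real stemming algorithm such as Porter's. Part-of-speech tagging and parsing are left out because they need trained models, which NLP libraries such as NLTK or spaCy provide.

```python
import re

# Hypothetical, deliberately tiny stopword list; real toolkits ship much longer ones.
STOPWORDS = {"a", "an", "and", "the", "is", "are", "was", "were", "of", "to", "in", "for", "all"}

# Toy suffix list for a naive stemmer; this is NOT the Porter algorithm.
SUFFIXES = ("ing", "ed", "s")

# Hypothetical lemma table; real lemmatizers use a full lexicon plus POS tags.
LEMMAS = {"ran": "run", "better": "good", "mice": "mouse"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens, dropping whitespace and punctuation."""
    return re.findall(r"[a-z0-9']+", text.lower())


def char_tokens(word: str) -> list[str]:
    """Character-level tokens, e.g. 'fish' -> ['f', 'i', 's', 'h']."""
    return list(word)


def naive_stem(token: str) -> str:
    """Strip the first matching suffix, keeping a stem of at least three characters."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def subword_tokens(word: str) -> list[str]:
    """Split off a known suffix as its own token, e.g. 'fishing' -> ['fish', 'ing']."""
    stem = naive_stem(word)
    return [stem, word[len(stem):]] if stem != word else [word]


def lemmatize(token: str) -> str:
    """Map a token to its dictionary form when the lemma table knows it."""
    return LEMMAS.get(token, token)


def preprocess(text: str) -> list[str]:
    """Tokenize, drop stopwords, then stem each remaining token."""
    return [naive_stem(t) for t in tokenize(text) if t not in STOPWORDS]


if __name__ == "__main__":
    print(preprocess("The fishing trips were great, and the guides answered all questions."))
```

Running the script prints `['fish', 'trip', 'great', 'guide', 'answer', 'question']`: the stopwords are gone and suffixes such as ‘-ing’ and ‘-s’ have been stripped. Note the contrast between the two normalisation steps: `naive_stem("mice")` leaves the word unchanged, while `lemmatize("mice")` returns its dictionary form, ‘mouse’.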

3. Text Analytics

After these preparatory steps, you can apply Text Analytics techniques to derive insights. Of the many techniques available, text extraction and text classification are the most prominent.

- Text Extraction: This technique extracts identifiable, structured information from unstructured text, such as people’s names, keywords, events, and places. Regular expressions are one of the simplest extraction methods, but they become hard to maintain as the input data grows more complex. Text extraction can also use a statistical method called CRF (Conditional Random Fields), which is more sophisticated but highly effective at extracting key information from unstructured text.

- Text Classification: Also known as tagging or text categorisation, text classification assigns tags to text based on its content. For instance, client reviews can be tagged ‘positive’ or ‘negative’. Data professionals classify text using rule-based systems, machine learning, or deep learning-based systems. In a rule-based system, humans define the link between a tag and a language pattern. For reviews, the indicators are easy to guess: “good” suggests a positive review and “bad” a negative one.
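As a rough illustration of both techniques, the sketch below uses regular expressions to pull email addresses and dates out of free text, and a tiny rule-based classifier in which a human-defined list of cue words is linked to each tag. The patterns and word lists are hypothetical examples, not production rules. They also hint at why regex-based extraction becomes hard to maintain: every new date or address format needs another pattern.

```python
import re

# Hypothetical extraction patterns for illustration only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
DATE = re.compile(
    r"\b\d{1,2} (?:January|February|March|April|May|June|July|August|"
    r"September|October|November|December) \d{4}\b"
)

# Hypothetical rule set: a human links each cue word to a tag.
POSITIVE = {"good", "great", "excellent"}
NEGATIVE = {"bad", "poor", "terrible"}


def extract(text: str) -> dict[str, list[str]]:
    """Text extraction: pull structured fields (emails, dates) out of free text."""
    return {"emails": EMAIL.findall(text), "dates": DATE.findall(text)}


def rule_based_tag(text: str) -> str:
    """Rule-based classification: compare counts of positive and negative cue words."""
    words = re.findall(r"[a-z]+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    print(extract("Write to jane.doe@example.com before 12 April 2024."))
    print(rule_based_tag("Good product, great price"))
```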

Machine learning and deep learning models learn from historical, already-tagged examples (training data) and use them to assign tags to new data. Both the quality and the volume of the training data matter: a large, representative training set allows machine learning algorithms to produce more accurate tags. The most prominent algorithms used in text classification are SVM (Support Vector Machines), the NB (Naive Bayes) family of algorithms, and deep learning algorithms.
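To show how a machine learning classifier learns tags from training data, here is a minimal multinomial Naive Bayes classifier written from scratch. It is a sketch of the textbook algorithm with add-one (Laplace) smoothing, not a production library, and the four training reviews are made-up examples.

```python
import math
from collections import Counter, defaultdict


class NaiveBayes:
    """Multinomial Naive Bayes over word counts, with add-one (Laplace) smoothing."""

    def __init__(self):
        self.class_counts = Counter()            # number of training documents per tag
        self.word_counts = defaultdict(Counter)  # word frequencies per tag
        self.vocab = set()

    def fit(self, docs, labels):
        """docs: list of token lists; labels: the tag for each document."""
        for tokens, label in zip(docs, labels):
            self.class_counts[label] += 1
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        return self

    def predict(self, tokens):
        """Return the tag with the highest log posterior for a new document."""
        n_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, count in self.class_counts.items():
            score = math.log(count / n_docs)  # log prior P(tag)
            denom = sum(self.word_counts[label].values()) + len(self.vocab)
            for t in tokens:
                if t in self.vocab:  # words never seen in training are ignored
                    score += math.log((self.word_counts[label][t] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


if __name__ == "__main__":
    train = [
        ("good product works great", "positive"),
        ("great value good quality", "positive"),
        ("bad product broke fast", "negative"),
        ("terrible quality bad support", "negative"),
    ]
    model = NaiveBayes().fit([t.split() for t, _ in train], [y for _, y in train])
    print(model.predict("good quality".split()))
```

With this toy training set, `predict(["good", "quality"])` returns `"positive"`, because ‘good’ appears more often in the positive reviews. In practice, libraries such as scikit-learn provide tuned implementations of Naive Bayes and SVM classifiers.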

Benefits of Text Analytics

As of early 2024, nearly 5.3 billion people had internet access (roughly two-thirds of the global population), and around 62% of the world’s population was active on social media. The internet generates substantial data every hour through articles, blogs, reviews, tweets, surveys, webinars, and forum discussions. Moreover, most customer interactions have moved online, creating massive text datasets that call for big data analytics.

However, most text data is unstructured and scattered across the web. Data professionals can derive significant knowledge from it if they carefully gather, collate, structure, and analyse it. Organisations can act on these insights to improve ROI, customer satisfaction, research and development, and even national security.

The following are some benefits of text analytics for both for-profit and non-profit organisations:

- Text Analytics helps businesses understand product performance, client trends, and quality of service. It supports faster decision-making, improves business intelligence, boosts productivity, and can reduce production costs.

- It helps researchers survey a large body of existing literature in a short time, pinpoint relevant study material, and reach scientific breakthroughs faster.

- It helps interpret common trends and opinions in the community, supporting decision-making by governments and other statutory bodies.

- Text analytics techniques help information retrieval systems and search engines return relevant results faster, improving the user experience.

- It improves content recommendation systems by classifying related content.

Text Analytics Techniques and Inherent Use Cases

There are several distinct techniques for analysing unstructured text, and data professionals use each one for different scenarios.

1. Sentiment Analysis

Data professionals apply sentiment analysis to identify the emotions expressed in unstructured text. Sentiment analysis comes in several forms. Polarity analysis determines whether a text expresses positive or negative sentiment, while finer-grained classification assigns more specific emotions, such as angry, disappointed, or perplexed.

Common uses of sentiment analysis include:

- Measuring client response to a specific product or service

- Understanding how the target audience feels about a particular brand

- Spotting and prioritising new trends in the consumer space

- Tracking customer sentiment over time

- Identifying customer service issues and ranking them by severity
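One way to track customer sentiment over time is to give each review a polarity score and average the scores per month. The sketch below assumes a small hypothetical word lexicon with hand-picked weights and a simple rule that flips the sign of a word preceded by ‘not’, ‘never’, or ‘no’; in practice, trained models or published sentiment lexicons would replace both.

```python
import re
from collections import defaultdict
from statistics import mean

# Hypothetical polarity weights; real lexicons or trained models are far richer.
LEXICON = {"love": 2, "great": 2, "good": 1, "slow": -1, "bad": -1, "broken": -2, "awful": -2}
NEGATORS = {"not", "never", "no"}


def polarity(text):
    """Sum lexicon weights, flipping the sign of a word that follows a negator."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        weight = LEXICON.get(token, 0)
        if i > 0 and tokens[i - 1] in NEGATORS:
            weight = -weight
        score += weight
    return score


def sentiment_by_month(reviews):
    """reviews: iterable of (ISO date 'YYYY-MM-DD', text). Returns {month: mean polarity}."""
    buckets = defaultdict(list)
    for date, text in reviews:
        buckets[date[:7]].append(polarity(text))  # 'YYYY-MM' groups reviews by month
    return {month: mean(scores) for month, scores in sorted(buckets.items())}


if __name__ == "__main__":
    reviews = [
        ("2024-01-05", "I love it"),
        ("2024-01-20", "bad support"),
        ("2024-02-02", "broken on arrival"),
    ]
    print(sentiment_by_month(reviews))
```

Plotting the returned monthly averages gives a simple sentiment graph; a sustained drop is a cue to look at the underlying reviews.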

2. Topic Modelling

Topic modelling discovers the main topics or themes in a large body of text or a collection of documents. It identifies the keywords that indicate what each document is about.

Some use cases of topic modelling are as follows:

- Law firms use topic modelling to review large volumes of documents during complex, large-scale litigation.

- Online media outlets use topic modelling to identify topics trending on search engines.

- Researchers in the IT domain apply topic modelling for exploratory literature reviews.

- Businesses can gauge which of their products are relevant to current market trends.

- Topic modelling helps anthropologists identify emerging problems and tendencies in a community based on the content its members share on the web.
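The article does not name a specific topic modelling algorithm; a widely used one is Latent Dirichlet Allocation (LDA). The sketch below implements LDA with collapsed Gibbs sampling in plain Python, assuming tokenized documents as input and illustrative hyperparameters (`alpha=0.1`, `beta=0.01`). It is meant to show the mechanics, not to replace an optimised library.

```python
import random
from collections import Counter


def lda_gibbs(docs, n_topics, n_iter=200, alpha=0.1, beta=0.01, seed=0):
    """Collapsed Gibbs sampling for LDA.

    docs: list of token lists. Returns (topic_word, doc_topic) count tables.
    """
    rng = random.Random(seed)
    vocab_size = len({w for doc in docs for w in doc})
    doc_topic = [[0] * n_topics for _ in docs]
    topic_word = [Counter() for _ in range(n_topics)]
    topic_total = [0] * n_topics

    # Start by assigning every token to a random topic.
    assignments = []
    for d, doc in enumerate(docs):
        z_doc = [rng.randrange(n_topics) for _ in doc]
        for w, z in zip(doc, z_doc):
            doc_topic[d][z] += 1
            topic_word[z][w] += 1
            topic_total[z] += 1
        assignments.append(z_doc)

    for _ in range(n_iter):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                # Remove the token's current assignment from the counts.
                z = assignments[d][i]
                doc_topic[d][z] -= 1
                topic_word[z][w] -= 1
                topic_total[z] -= 1
                # Resample: weight = (doc's affinity for topic) * (topic's affinity for word).
                weights = [
                    (doc_topic[d][k] + alpha)
                    * (topic_word[k][w] + beta)
                    / (topic_total[k] + vocab_size * beta)
                    for k in range(n_topics)
                ]
                z = rng.choices(range(n_topics), weights=weights)[0]
                assignments[d][i] = z
                doc_topic[d][z] += 1
                topic_word[z][w] += 1
                topic_total[z] += 1
    return topic_word, doc_topic


def top_words(topic_word, n=3):
    """Most frequent words per topic: a rough label for each theme."""
    return [[w for w, c in counts.most_common(n) if c > 0] for counts in topic_word]


if __name__ == "__main__":
    docs = [s.split() for s in [
        "court ruling appeal court judge",
        "judge appeal lawsuit ruling",
        "match goal striker goal",
        "striker match coach goal",
    ]]
    topic_word, doc_topic = lda_gibbs(docs, n_topics=2)
    print(top_words(topic_word))
    print(doc_topic)
```

On clearly separated documents like these, the two topics typically end up grouping the legal terms and the sports terms, but the exact result depends on the random seed and the number of iterations.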

3. NER (Named Entity Recognition)

NER is a Text Analytics method for identifying named entities, such as people, organisations, events, and places, in unstructured content. NER extracts nouns and noun phrases from the text and determines which type of entity each one refers to. The use cases are as follows:

- Professionals use NER to categorise news content by the people, places, and organisations that appear in it.

- Search and recommendation engines apply NER to retrieve relevant information.

- Large chain companies use NER to classify customer service requests and route them to a specific location or outlet.

- Healthcare providers and hospitals use NER to automate lab report analysis.
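Production NER systems use statistical or neural models, such as the CRFs mentioned earlier. A much simpler approach, shown here only to illustrate the input and output of NER, is a gazetteer: a dictionary of known entity names and their types. The entity names, the `PERSON`/`ORG`/`PLACE` labels, and the `route_request` helper are all hypothetical; the helper mirrors the use case of routing a service request to an outlet.

```python
import re

# Hypothetical gazetteer: known entity names (lowercased) mapped to entity types.
GAZETTEER = {
    "priya sharma": "PERSON",
    "acme foods": "ORG",
    "mumbai": "PLACE",
    "new delhi": "PLACE",
}
MAX_LEN = max(len(name.split()) for name in GAZETTEER)  # longest entry, in tokens


def find_entities(text):
    """Return (surface text, type) pairs, preferring the longest gazetteer match."""
    tokens = re.findall(r"\w+", text)
    entities, i = [], 0
    while i < len(tokens):
        for n in range(min(MAX_LEN, len(tokens) - i), 0, -1):
            candidate = " ".join(tokens[i:i + n])
            label = GAZETTEER.get(candidate.lower())
            if label:
                entities.append((candidate, label))
                i += n
                break
        else:  # no entity starts at this token
            i += 1
    return entities


def route_request(text):
    """Route a service request to the first place it mentions, if any."""
    places = [name for name, label in find_entities(text) if label == "PLACE"]
    return places[0] if places else "unassigned"


if __name__ == "__main__":
    print(find_entities("Priya Sharma visited the Mumbai outlet of Acme Foods."))
    print(route_request("My order at the New Delhi store was late"))
```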

4. TF-IDF

Term Frequency-Inverse Document Frequency (TF-IDF) measures how often a term appears in a document (term frequency) and weighs that count by how rare the term is across the whole collection of documents (inverse document frequency). The IDF factor down-weights words that appear in almost every document, such as articles, prepositions, and conjunctions, so terms that are distinctive to a document score highest.
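In its basic form, the TF-IDF weight of term t in document d is tf(t, d) × log(N / df(t)), where tf(t, d) is the term's share of the words in d, N is the number of documents, and df(t) is the number of documents containing t. The sketch below computes exactly that; libraries often use smoothed or normalised variants, so their numbers will differ.

```python
import math
from collections import Counter


def tf_idf(docs):
    """docs: list of token lists. Returns one {term: weight} dict per document."""
    n_docs = len(docs)
    # Document frequency: in how many documents each term appears at least once.
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights


if __name__ == "__main__":
    docs = [["the", "cat", "sat"], ["the", "dog", "ran"], ["the", "cat", "ran"]]
    print(tf_idf(docs)[0])
```

For these three documents, ‘the’ appears everywhere and gets a weight of 0, while ‘sat’, which appears in only one document, outweighs ‘cat’, which appears in two.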

5. Event Extraction

Event extraction builds on NER: it identifies events mentioned in text content, such as acquisitions, mergers, political moves, or important meetings. It requires a deeper interpretation of the semantics of the text. State-of-the-art algorithms detect events along with their dates, times, venues, and participants. The technique has many uses across digital business domains, including:

- Link Analysis: This technique reveals connections between individuals, for example that two people have met, by analysing their social media connectivity. Law enforcement agencies use link analysis to anticipate and prevent national security threats.

- Geospatial Analysis: When events are extracted together with their locations, the results can be overlaid on a map, which greatly helps the geospatial analysis of events.

- Business Risk Monitoring: Large organisations work with numerous partner companies and vendors. Event extraction lets them monitor the web to check whether their suppliers or vendors are facing adverse events such as lawsuits or bankruptcy.
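For business risk monitoring, a very simple form of event extraction is to flag sentences that mention a watched vendor together with an event trigger word, and to capture any date in the same sentence. The trigger list, date pattern, and vendor names below are hypothetical; real event extraction relies on deeper semantic analysis than keyword matching.

```python
import re

# Hypothetical trigger words mapped to event types; real systems learn these from annotated data.
TRIGGERS = {
    "sued": "LAWSUIT",
    "lawsuit": "LAWSUIT",
    "bankruptcy": "BANKRUPTCY",
    "acquired": "ACQUISITION",
    "merger": "MERGER",
}
# Matches dates such as "3 March 2024" or "15 Sep 2023".
DATE = re.compile(
    r"\b\d{1,2} (?:Jan|Feb|Mar|Apr|May|Jun|Jul|Aug|Sep|Oct|Nov|Dec)[a-z]* \d{4}\b"
)


def extract_events(text, watchlist):
    """Return one record per sentence that mentions a watched vendor and an event trigger."""
    events = []
    for sentence in re.split(r"(?<=[.!?])\s+", text):  # naive sentence splitter
        lowered = sentence.lower()
        words = re.findall(r"[a-z]+", lowered)
        event_types = sorted({TRIGGERS[w] for w in words if w in TRIGGERS})
        vendors = [v for v in watchlist if v.lower() in lowered]
        if event_types and vendors:
            date = DATE.search(sentence)
            events.append({
                "vendors": vendors,
                "types": event_types,
                "date": date.group() if date else None,
            })
    return events


if __name__ == "__main__":
    news = ("Globex Ltd filed for bankruptcy on 3 March 2024. "
            "Initech opened a new office. Acme was sued by a customer.")
    for event in extract_events(news, ["Globex Ltd", "Initech", "Acme"]):
        print(event)
```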

What is Unstructured Data?

Unstructured data is information that is not organised according to a particular order, schema, or structure that makes it easy for users to read and query. It is raw, disorganised information, and it makes up much of the data in an organisation’s ecosystem. Because it does not fit traditional data models such as relational tables, it presents distinct business challenges and opportunities. Processing and leveraging unstructured data requires suitable, innovative tools, such as AI-driven OCR (Optical Character Recognition), that turn disorderly information into material that big data analytics can use.

Examples of Unstructured Data

Unstructured data can be textual or non-textual, and machine-generated or human-generated. Here are some examples:

- Text Data: The raw, unstructured information in emails, word-processor documents, text messages, text files, PDFs, and similar formats.

- Multimedia Messages: Images (GIF, JPEG, PNG), audio, and video. These files are stored as binary data that does not follow a schema that can be queried directly, so they fall into the unstructured category.

- Web Content: Websites present much of their information as long, scattered paragraphs. This content may contain valuable information, but it is hard to use because it lacks a systematic structure.

- Sensor Data – IoT Applications: The IoT (Internet of Things) consists of physical devices that gather information about their surroundings and send the data to the cloud. IoT devices return sensor data that is usually unstructured and needs refinement. Examples include traffic monitoring applications and voice services such as Alexa and Google Home.

- Business Documents: Businesses handle many categories of documents, such as emails, invoices, PDFs, and customer orders, each with its own layout and structure. Companies can use intelligent document processing software to extract data from these documents, including PDFs.

Difference between Structured and Unstructured Data

Computer programmers and data professionals classify data into three categories, each with its own features and potential applications.

Source: lawtomated.com

- Structured Data: This type of data follows a specific schema or format, which makes it easy to search and to store in databases. It typically consists of data that users enter, store, and query in a fixed format, such as SQL databases or spreadsheets.

- Unstructured Data: Unstructured data does not adhere to any defined model or format and is harder to manage and interpret. It is often text-heavy, but it can also take the form of images, videos, and other media files. It is commonly found in multimedia content and on social media, where it remains in its raw, native form.

- Semi-Structured Data: This is a combination of structured and unstructured data. It has no rigid schema, but it uses tags or similar markers to separate semantic elements and impose a hierarchy of records and fields. XML and JSON files are common examples.
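The difference between the three categories can be illustrated with Python's standard `json` and `sqlite3` modules. The JSON records below are a hypothetical example of semi-structured data: tags name each field, but fields can be nested or missing. Flattening them into a SQL table makes them structured and queryable, while the free-text `note` field stays unstructured and would still need text analytics to interpret.

```python
import json
import sqlite3

# Hypothetical semi-structured input: keys name each field, but records can nest
# values ("customer") or omit fields ("note"), so there is no fixed schema.
RAW_ORDERS = """[
  {"order_id": 1, "customer": {"name": "Asha"}, "items": ["pen", "ink"],
   "note": "Please deliver after 5 pm"},
  {"order_id": 2, "customer": {"name": "Ravi"}, "items": ["notebook"]}
]"""


def load_orders(raw_json):
    """Flatten semi-structured JSON records into a structured SQL table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (order_id INTEGER, customer TEXT, n_items INTEGER, note TEXT)"
    )
    for record in json.loads(raw_json):
        conn.execute(
            "INSERT INTO orders VALUES (?, ?, ?, ?)",
            (
                record["order_id"],
                record["customer"]["name"],
                len(record["items"]),
                record.get("note"),  # free text stays unstructured; missing -> NULL
            ),
        )
    return conn


if __name__ == "__main__":
    conn = load_orders(RAW_ORDERS)
    # The structured columns can now be queried with SQL.
    print(conn.execute("SELECT customer, n_items FROM orders ORDER BY order_id").fetchall())
```

Running the script prints `[('Asha', 2), ('Ravi', 1)]`.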

Practical Challenges - Unstructured Data

As a working professional, you may have come across the estimate that 67% of commercial and non-commercial organisations worldwide work with structured data, while the remaining 33% work with unstructured data. Much of the potential of converting unstructured data into structured data remains untapped because unstructured data is difficult to access and process in practice.

The potential hurdles include:

- Storage Demands: As businesses increasingly prioritise digital capability, unstructured data takes up more space and is harder to manage and control than structured data. This can cause storage problems and complicate data management.

- Bulk and Form: Unstructured data comes in many forms and in high volumes, which makes it complex to manage. Handling such diverse material, from text documents to multimedia files, is time-consuming for data professionals.

- Extraction Time: At a time when speed is synonymous with competitive edge, the laborious process of extracting usable information from unstructured data can hinder timely decision-making and operational efficiency.

- Compromised Data Quality: Searching and sifting through unstructured data to find relevant information takes a lot of work, and irrelevant details often mask valuable insights. Robust filtering processes are needed to ensure data accuracy and usefulness.

However, AI-driven data extraction applications and deep learning models have made extracting and processing unstructured data more efficient, saving time and effort. Modern tools used by data professionals in large companies combine strong OCR capabilities, AI-driven data extraction models, and automated workflows that improve productivity while maintaining precision.

Applications of Unstructured Data in Different Industries

Businesses across the globe use unstructured data extraction to make better use of their business documents and add more intelligence to their analytics. Here are examples of how different organisations use intelligent document processing (IDP) platforms to extract unstructured data and enhance productivity.

- Banks: Banks and other financial institutions use IDP (Intelligent Document Processing) to extract insights from raw sources such as customer forms, claims, call records, KYC documents, financial reports, and so on.

- Insurance: The insurance sector is strictly regulated by the competent authorities, and every step of the claims process requires documentation and verification. Insurers use automated document processing platforms to settle claims, manage risk, and carry out other rule-based functions.

Insurance claims involve a substantial volume of unstructured data. Data professionals at insurance companies extract this data using AI-powered platforms, which simplifies the claims process and enables selective data extraction from PDFs, images, audio, and video.

- Real Estate: Real estate companies and agencies deal with many stakeholders at once, including customers, tenants, builders, property owners, competitors, and vendors. Automated document processing software can help them build detailed stakeholder profiles. It can also streamline data extraction from unstructured sources such as contracts, rental leases, and property valuation papers.

- Health Sector: Hospitals and healthcare units that want to provide an excellent patient experience, minimise wait times, and keep staff working hours reasonable can adopt big data analytics tools such as IDP platforms. These let them extract insights from unstructured sources such as customer feedback, EHRs, patient surveys, customer complaints, literature reviews, and regulatory websites, helping healthcare professionals and management deliver a better patient experience.

Conclusion

Once you know your requirements, you as a data professional can transform unstructured data into practical, well-directed information. This gives you the intelligence to resolve targeted issues and helps your managers make robust, informed business decisions.

To learn text analytics with guidance from industry experts, join the Post Graduate Certification Programme in Business Analytics & Applications conducted by IIM Tiruchirappalli. This 13-month programme offers attractive opportunities for peer networking. Working professionals in business analytics at reputed companies will find it an ideal platform to hone their skills for career growth. After completing the course, you will be able to derive meaningful insights from unstructured data that improve decision-making effectively and seamlessly.