- jaro education

- 27, February 2024

- 10:00 am

Machine learning functions as the brain of AI systems, helping them complete tasks by predicting outcomes from the data they receive. Think of it as a super-smart assistant! Its two most common tasks are classification (assigning items to categories) and regression (predicting numeric values). Did you know AI and machine learning together could create a whopping $33 trillion in value every year by 2025? That’s a lot of value!

So, what’s the deal with machine learning algorithms? They’re like math wizards in the AI world. They learn from data, figure out patterns, and predict what might happen next. Data experts help them learn by giving them training data. It’s like training a superhero – the more they train, the better they get at making decisions and giving the right answers.

The big deal about these algorithms is that they’re super good at going through tons of data really quickly. It’s like having a super-speedy helper who can look at lots of information and tell us useful stuff way faster than we can. These clever machine learning algorithms can find hidden patterns, make predictions, and get better at their jobs over time. They handle all kinds of tasks, from predicting stock market changes using simple maths to sorting things out with a method called K-Nearest Neighbors. To truly harness the power of these algorithms and delve into the world of data science, consider enrolling in the Executive Programme in Data Science using Machine Learning & Artificial Intelligence by IIT Delhi.

This blog explores the top 15 machine learning algorithms that everyone’s talking about. By the end, you’ll have a clear picture of the different machine-learning tools and how they’re making our tech world even more exciting!

Top 15 Types of Machine Learning Algorithms

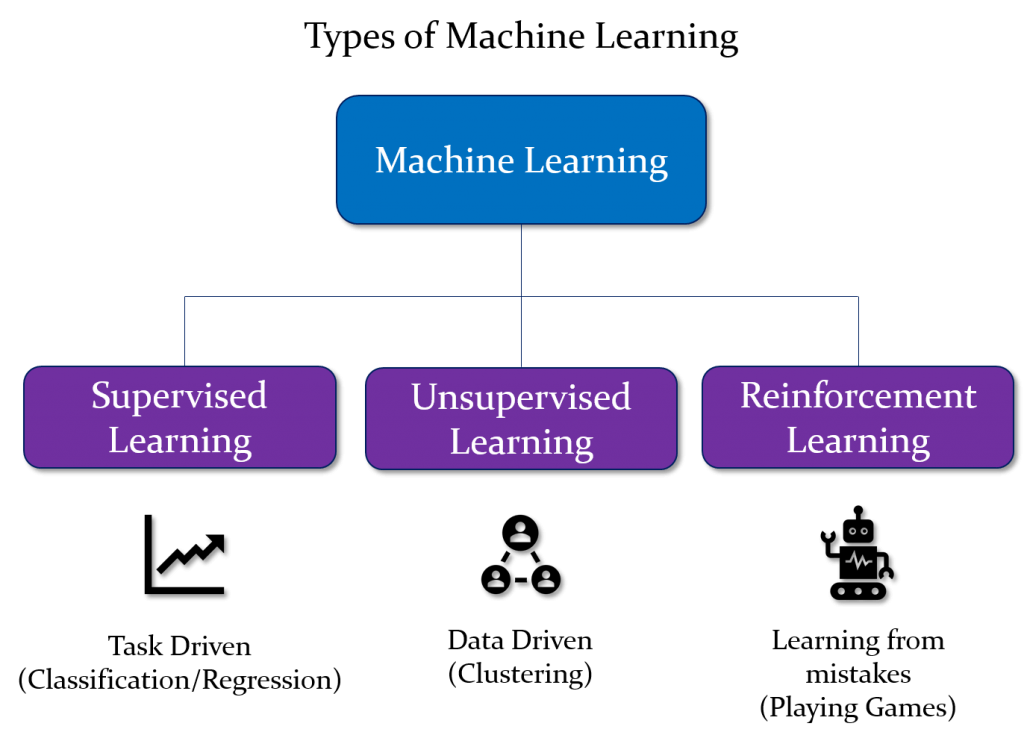

Machine learning algorithms are commonly divided into three categories based on their learning approach: supervised learning, unsupervised learning, and reinforcement learning. The list below covers the top algorithms in these three categories, followed by a few other effective techniques.

*newtechdojo.com

Supervised Learning Algorithms

1. Linear Regression

Linear regression, a fundamental concept in supervised machine learning, models the linear relationship between a dependent variable and one or more independent features. Whether used as simple linear regression with a single feature or multiple linear regression with several features, it is highly interpretable. The model’s equation shows the impact of each independent variable through its coefficient, which helps in understanding how the data behaves.

Beyond its predictive capabilities, linear regression serves as the foundation for more advanced techniques such as regularized regression (ridge and lasso), and its linear form reappears in models like support vector machines. It also helps researchers validate key assumptions about the dataset. Thanks to its simplicity, transparency, and interpretability, linear regression has wide-ranging applications in machine learning.
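To make the idea concrete, here is a minimal sketch of simple linear regression using the standard least-squares formulas. The function name and the tiny dataset (hours studied versus score) are made up for illustration; real projects would normally rely on a library.

```python
def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data that follows y = 2x + 1 exactly
hours = [1.0, 2.0, 3.0, 4.0]
scores = [3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_simple_linear_regression(hours, scores)
print(slope, intercept)  # slope 2.0, intercept 1.0
```

The slope is the interpretable part: each extra unit of the feature changes the prediction by that amount.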

2. Logistic Regression

Logistic Regression, a popular Supervised Learning algorithm, predicts categorical outcomes from a set of independent variables. Unlike Linear Regression, which targets regression problems, Logistic Regression is designed for classification: it outputs a probability between 0 and 1, which is then compared with a threshold (commonly 0.5) to pick a class. Its distinctive “S”-shaped logistic (sigmoid) function suits binary outcomes, such as whether or not a subject has cancer or is obese.

Logistic Regression can generate probabilities and classify new observations using both continuous and discrete input features. Its strengths are that it categorizes observations and, through its coefficients, indicates which variables most influence the classification.
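As a rough illustration, the sketch below fits a one-feature logistic regression with plain gradient descent. The function names, learning rate, epoch count, and the centered toy data are assumptions chosen for the example, not values from any particular library.

```python
import math


def sigmoid(z):
    """The S-shaped logistic function, mapping any number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic_regression(xs, ys, learning_rate=0.1, epochs=500):
    """Batch gradient descent on the log loss for a single feature."""
    weight, bias = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(weight * x + bias) - y  # prediction minus label
            grad_w += error * x
            grad_b += error
        weight -= learning_rate * grad_w / n
        bias -= learning_rate * grad_b / n
    return weight, bias


# Hypothetical, centered feature values: negatives are class 0, positives class 1
xs = [-3.5, -2.5, -1.5, 1.5, 2.5, 3.5]
ys = [0, 0, 0, 1, 1, 1]
weight, bias = fit_logistic_regression(xs, ys)
print(sigmoid(weight * -3.5 + bias))  # probability of class 1, below 0.5
print(sigmoid(weight * 3.5 + bias))   # probability of class 1, above 0.5
```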

3. Decision Trees

The Decision Tree is a versatile Supervised Learning method that handles both classification and regression, although it is most often used for classification. In its tree-like structure, internal (decision) nodes test dataset features, branches represent the outcomes of those tests (the decision rules), and leaf nodes hold the final results. A common way to build the tree is the CART (Classification and Regression Trees) algorithm, which starts at the root node and repeatedly splits the data, so the structure grows like a tree.
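The heart of growing a tree is choosing a good split. The sketch below scores candidate thresholds on one feature with Gini impurity, the criterion CART commonly uses for classification. The helper names and data are hypothetical, and it only tries observed values as thresholds, which is a simplification.

```python
def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_split(values, labels):
    """Return (threshold, weighted impurity) of the best 'value <= threshold' test."""
    best = (None, float("inf"))
    n = len(values)
    # Skip the largest value so the right-hand side is never empty
    for threshold in sorted(set(values))[:-1]:
        left = [l for v, l in zip(values, labels) if v <= threshold]
        right = [l for v, l in zip(values, labels) if v > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best[1]:
            best = (threshold, score)
    return best


# Hypothetical feature values with their class labels
values = [1, 2, 3, 10, 11, 12]
labels = ["no", "no", "no", "yes", "yes", "yes"]
print(best_split(values, labels))  # (3, 0.0): splitting at 3 separates the classes
```

A full tree would apply the same search recursively to the left and right subsets until the leaves are pure enough.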

5. Random Forest

The Random Forest algorithm is well known for its versatility in both classification and regression tasks. It builds many decision trees, each on a random sample of the data, and combines their outputs by majority vote (classification) or averaging (regression). Its user-friendliness and adaptability make it a popular choice in machine learning. Notably, combining many trees reduces the overfitting that a single decision tree is prone to, and the algorithm can work with both continuous and categorical variables, which helps it perform well across a variety of predictive tasks.
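The sketch below illustrates the ensemble idea behind Random Forest: train many simple models on bootstrap samples (random samples drawn with replacement) and let them vote. It is deliberately simplified. Each "tree" is a one-threshold stump, and because the toy data has a single feature there is no random feature selection, which real random forests also use. All names and data are hypothetical.

```python
import random
from collections import Counter


def fit_stump(sample):
    """Tiny base learner: a threshold halfway between the two class means."""
    zeros = [x for x, y in sample if y == 0]
    ones = [x for x, y in sample if y == 1]
    if not zeros or not ones:  # the bootstrap sample contained one class only
        only = sample[0][1]
        return lambda x: only
    mean_zero = sum(zeros) / len(zeros)
    mean_one = sum(ones) / len(ones)
    threshold = (mean_zero + mean_one) / 2
    ones_above = mean_one > mean_zero
    return lambda x: int((x > threshold) == ones_above)


def fit_forest(data, n_trees=25, seed=0):
    """Fit each stump on its own bootstrap sample of the data."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        bootstrap = [rng.choice(data) for _ in data]  # sample with replacement
        forest.append(fit_stump(bootstrap))
    return forest


def predict(forest, x):
    """Majority vote across all trees."""
    votes = Counter(tree(x) for tree in forest)
    return votes.most_common(1)[0][0]


# Hypothetical (feature, label) pairs
data = [(1, 0), (2, 0), (3, 0), (8, 1), (9, 1), (10, 1)]
forest = fit_forest(data)
print(predict(forest, 2), predict(forest, 9))
```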

6. Support Vector Machines (SVM)

Support Vector Machine (SVM) is a highly adaptable Supervised Learning algorithm, used primarily for classification. Its goal is to find the optimal hyperplane: the boundary that separates the classes in n-dimensional space with the widest possible margin. The training points closest to that boundary, known as support vectors, are the ones that determine where it lies, which is how SVM builds strong decision boundaries for a wide range of dataset types.
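Training an SVM involves solving an optimization problem, which is beyond a short snippet. The sketch below instead takes a hyperplane as given (the weights and bias are assumed to be already found) and shows how the margin and the support vectors are read off from it. Function names and data are hypothetical.

```python
import math


def signed_distance(point, weights, bias):
    """Signed distance from a point to the hyperplane w.x + b = 0."""
    norm = math.sqrt(sum(w * w for w in weights))
    return (sum(w * x for w, x in zip(weights, point)) + bias) / norm


def support_vectors(points, labels, weights, bias, tol=1e-9):
    """Return the points closest to the boundary and their margin."""
    # A positive margin means the point is on the correct side
    margins = [y * signed_distance(p, weights, bias) for p, y in zip(points, labels)]
    smallest = min(margins)
    closest = [p for p, m in zip(points, margins) if m - smallest <= tol]
    return closest, smallest


# Hypothetical 2-D points with labels -1 / +1, and an assumed hyperplane x1 + x2 - 5 = 0
points = [(1, 1), (2, 1), (4, 3), (5, 5)]
labels = [-1, -1, 1, 1]
vectors, margin = support_vectors(points, labels, weights=(1, 1), bias=-5)
print(vectors, margin)  # (2, 1) and (4, 3) sit closest, at distance sqrt(2)
```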

7. K-Nearest Neighbors (KNN)

K-Nearest Neighbors (KNN) is a simple but effective machine learning algorithm used primarily for classification, although it can also handle regression. It classifies a new data point by finding the k stored instances most similar to it (its nearest neighbors) and assigning the category that is most common among them. As a non-parametric, lazy learner, KNN simply stores the entire dataset during training and does the real work at prediction time. This keeps the method simple and easy to adapt, though predictions can become slow on very large datasets because every query is compared against the stored instances.
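Here is a minimal sketch of KNN classification with Euclidean distance and a majority vote. The function name, the choice of k = 3, and the toy points are assumptions for illustration.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Label the query with the most common label among its k nearest points."""
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Hypothetical 2-D training data forming two groups
points = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 6), (6, 7)]
labels = ["a", "a", "a", "b", "b", "b"]
print(knn_predict(points, labels, (2, 2)))  # a
print(knn_predict(points, labels, (6, 5)))  # b
```

Note that there is no training step at all: the "model" is just the stored data.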

Unsupervised Learning Algorithms

1. K-Means Clustering

K-means Clustering is a fundamental unsupervised machine learning algorithm that organizes unlabeled data based on similarities and patterns. The goal is to divide the data points into k groups so that points within a group are as similar as possible and points in different groups are as different as possible. It does this by repeatedly assigning each point to its nearest cluster center (centroid) and then moving each centroid to the mean of its assigned points. This enables structured analysis of data patterns without labeled examples, producing insights in the absence of explicit guidance.
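The loop of "assign points, then move centroids" can be sketched in a few lines. The starting centroids are passed in by hand here to keep the example deterministic; that choice, the function name, and the data are assumptions for illustration (practical implementations usually pick starting centroids randomly or with smarter schemes).

```python
import math


def k_means(points, centroids, iterations=10):
    """Alternate between assigning points and updating centroids."""
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its points
        # (an empty cluster keeps its old centroid)
        centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroid
            for cluster, centroid in zip(clusters, centroids)
        ]
    return centroids, clusters


# Hypothetical 2-D points and hand-picked starting centroids
points = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
centroids, clusters = k_means(points, centroids=[(1, 1), (9, 8)])
print(centroids)
print(clusters)
```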

2. Hierarchical Clustering

Hierarchical clustering employs two main techniques: agglomerative, which merges data points from the bottom up into clusters, and divisive, which divides a single cluster iteratively. In contrast to K-Means Clustering, which requires a predetermined cluster count and frequently produces uniformly sized clusters, hierarchical clustering is more versatile.

It eliminates the need for prior knowledge of the cluster count, which is useful when that number is uncertain: the full hierarchy (often drawn as a dendrogram) can be cut at whatever level suits the analysis. This flexibility sidesteps the fixed cluster counts and uniform cluster sizes of some alternative clustering algorithms, making hierarchical clustering a strong choice across a wide range of applications.
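The agglomerative (bottom-up) variant can be sketched as follows. This version uses single linkage (the distance between two clusters is the smallest gap between their members) on one-dimensional points, and records every merge so the history can be read like a dendrogram. The function name, the `stop_at` parameter, and the data are hypothetical.

```python
def agglomerative(points, stop_at=1):
    """Single-linkage agglomerative clustering on 1-D points."""
    clusters = [[p] for p in points]  # start with every point on its own
    merges = []                       # merge history: (cluster, cluster, gap)
    while len(clusters) > stop_at:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                gap = min(abs(a - b) for a in clusters[i] for b in clusters[j])
                if best is None or gap < best[0]:
                    best = (gap, i, j)
        gap, i, j = best
        merges.append((clusters[i], clusters[j], gap))
        clusters[i] = clusters[i] + clusters[j]  # merge the closest pair
        del clusters[j]
    return clusters, merges


# Hypothetical 1-D measurements
clusters, merges = agglomerative([1.0, 1.5, 5.0, 5.2, 9.0], stop_at=3)
print(clusters)  # [[1.0, 1.5], [5.0, 5.2], [9.0]]
```

Leaving `stop_at` at 1 builds the whole hierarchy, so the number of clusters can be decided afterwards by looking at where the merge gaps jump.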

3. Principal Component Analysis (PCA)

Principal Component Analysis (PCA) is an important unsupervised learning technique for dimensionality reduction in large datasets. Overfitting in machine learning models is common with high-dimensional data, highlighting the importance of dimensionality reduction techniques before model creation.

PCA excels at increasing interpretability while minimizing information loss, thereby assisting in the identification of important features. This technique converts the original variables into principal components, such as PC1 and PC2, in order to capture the maximum amount of data variance. Understanding dimensionality is critical, and PCA reduces dataset dimensionality, which improves visualization and simplifies trend identification.
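For two-dimensional data, PCA can be worked out by hand, which makes a compact illustration. The sketch below computes the covariance matrix, finds its largest eigenvalue in closed form, and reports the direction of PC1 and the share of variance it explains. The function name and data are hypothetical, and the closed form only applies to the 2-D case; higher dimensions need a general eigenvalue routine.

```python
import math


def pca_2d(points):
    """Return (unit direction of PC1, share of variance PC1 explains) for 2-D data."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    # Sample covariance matrix [[sxx, sxy], [sxy, syy]]
    sxx = sum((x - mean_x) ** 2 for x, _ in points) / (n - 1)
    syy = sum((y - mean_y) ** 2 for _, y in points) / (n - 1)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points) / (n - 1)
    # Eigenvalues of a symmetric 2x2 matrix in closed form
    half_trace = (sxx + syy) / 2
    spread = math.sqrt(((sxx - syy) / 2) ** 2 + sxy ** 2)
    largest, smallest = half_trace + spread, half_trace - spread
    # Eigenvector belonging to the largest eigenvalue
    if sxy != 0:
        axis = (largest - syy, sxy)
    else:
        axis = (1.0, 0.0) if sxx >= syy else (0.0, 1.0)
    length = math.hypot(*axis)
    axis = (axis[0] / length, axis[1] / length)
    return axis, largest / (largest + smallest)


# Hypothetical points lying close to the line y = x
points = [(1, 1.1), (2, 1.9), (3, 3.2), (4, 3.8)]
axis, explained = pca_2d(points)
print(axis, explained)
```

When PC1 explains nearly all the variance, as it does here, the second dimension can be dropped with little loss of information.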

Reinforcement Learning Algorithms

1. Q-Learning

Q-learning is a model-free, off-policy, value-based algorithm that identifies the best action to take in an agent’s current state. In contrast to model-based approaches, it does not need an explicit model of the environment; it learns from experience with the aim of maximizing future rewards. Being value-based, it trains an action-value function, Q(state, action), that estimates how good each action is in each state.

Being off-policy means that Q-learning learns about the greedy (best-known) policy while the agent may follow a different, more exploratory policy to gather experience. Policy-based methods, by contrast, train the action policy directly. This compact and adaptable approach makes Q-learning suitable for a variety of applications.
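The core of tabular Q-learning is a single update rule, sketched below. The state names, action names, learning rate (`alpha`), and discount factor (`gamma`) are made-up values for illustration.

```python
def q_update(q_table, state, action, reward, next_state, alpha=0.5, gamma=0.9):
    """One Q-learning step: nudge Q(s, a) toward r + gamma * max over a' of Q(s', a')."""
    # The max over next actions is what makes the method off-policy:
    # it assumes the best next action, whatever the agent actually does next.
    best_next = max(q_table[next_state].values())
    td_target = reward + gamma * best_next
    q_table[state][action] += alpha * (td_target - q_table[state][action])
    return q_table[state][action]


# Hypothetical two-state table
q_table = {
    "A": {"left": 0.0, "right": 0.0},
    "B": {"left": 0.0, "right": 2.0},
}
# The agent moved right from A, received reward 1, and landed in B
new_value = q_update(q_table, "A", "right", reward=1.0, next_state="B")
print(new_value)  # about 1.4, i.e. 0.5 * (1 + 0.9 * 2)
```

Repeating this update over many experienced transitions is what gradually fills in the table.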

2. Deep Q Network (DQN)

Deep Q-Network (DQN), a robust reinforcement learning algorithm, combines deep neural networks and Q-learning to help agents acquire optimal policies in complex environments.

DQN uses a deep neural network to estimate Q-values from states, an experience replay memory of past transitions to train on, the Q-learning rule for updates, a balance of exploration and exploitation (for example, epsilon-greedy action selection), and a separate target network for stability. This cycle of acting, storing, and updating refines the policy over time, which is what lets DQN handle complex learning scenarios.
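A full DQN needs a neural network library, so the sketch below covers only two of its supporting pieces: an experience replay buffer and the computation of training targets. The `target_q_values` argument is a stand-in for the target network (any function returning one Q-value per action); it and every other name here are hypothetical.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size memory of past transitions; the oldest ones are dropped first."""

    def __init__(self, capacity):
        self.memory = deque(maxlen=capacity)

    def add(self, state, action, reward, next_state, done):
        self.memory.append((state, action, reward, next_state, done))

    def sample(self, batch_size, rng=random):
        # Random sampling breaks up the correlation between consecutive steps
        return rng.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)


def td_targets(batch, target_q_values, gamma=0.99):
    """Targets r + gamma * max Q_target(s', a'), with no bootstrap at episode end."""
    targets = []
    for _, _, reward, next_state, done in batch:
        bootstrap = 0.0 if done else gamma * max(target_q_values(next_state))
        targets.append(reward + bootstrap)
    return targets


buffer = ReplayBuffer(capacity=2)
buffer.add(0, 0, 1.0, 1, False)
buffer.add(1, 1, 2.0, 2, True)
buffer.add(2, 0, 0.0, 3, False)  # pushes out the oldest transition
print(len(buffer))  # 2
```

In a real DQN, the online network would then be trained to move its Q-value predictions toward these targets.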

3. Policy Gradient Methods

The goal of Reinforcement Learning (RL) is to find the policy that maximizes expected rewards. Policy gradient methods, which belong to the family of policy-based methods, model the policy directly and optimize its parameters by gradient ascent on the expected return. These algorithms work in a model-free RL setting, meaning they need no prior knowledge of the environment’s model or transition probabilities. This makes them useful in environments with unknown dynamics, where adjusting the policy directly is the route to higher expected returns.
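To show the idea of "gradient ascent on the policy", the sketch below uses the simplest possible setting: a two-armed bandit with a softmax policy. To keep the example deterministic it uses the exact gradient, which assumes the average reward of each arm is known. Practical methods such as REINFORCE estimate this gradient from sampled experience instead. All names and numbers are hypothetical.

```python
import math


def softmax(preferences):
    """Turn action preferences into probabilities."""
    peak = max(preferences)
    exps = [math.exp(p - peak) for p in preferences]
    total = sum(exps)
    return [e / total for e in exps]


def policy_gradient_step(preferences, rewards, learning_rate=1.0):
    """One exact gradient-ascent step on expected reward for a softmax policy."""
    probs = softmax(preferences)
    expected = sum(p * r for p, r in zip(probs, rewards))
    # d(expected reward) / d(preference_i) = pi_i * (r_i - expected reward)
    return [
        pref + learning_rate * p * (r - expected)
        for pref, p, r in zip(preferences, probs, rewards)
    ]


# Hypothetical bandit: arm 0 pays 1.0 on average, arm 1 pays 0.0
rewards = [1.0, 0.0]
preferences = [0.0, 0.0]  # start with a 50/50 policy
for _ in range(100):
    preferences = policy_gradient_step(preferences, rewards)
print(softmax(preferences))  # the probability of arm 0 has grown well above 0.9
```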

4. Actor-Critic Methods

Actor-critic, a pivotal family of reinforcement learning algorithms, uses two components (typically two networks): the actor chooses actions, and the critic evaluates them with a value function to guide the actor’s adjustments. It can be seen as a temporal-difference version of the policy gradient approach: the actor is updated with a policy gradient, using the critic’s temporal-difference error as the signal for whether an action turned out better or worse than expected.

Actor-critic, which is widely used in Reinforcement Learning, excels at decision-making in a variety of artificial intelligence applications by maintaining a dynamic equilibrium between action determination and evaluation for efficient learning and adaptation.
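A single actor-critic update can be sketched with plain tables standing in for the two networks. The critic is a table of state values, the actor is a table of action preferences turned into probabilities by softmax, and both are adjusted using the same temporal-difference error. Names, learning rates, and the toy numbers are assumptions for illustration.

```python
import math


def softmax(preferences):
    """Turn action preferences into probabilities."""
    peak = max(preferences)
    exps = [math.exp(p - peak) for p in preferences]
    total = sum(exps)
    return [e / total for e in exps]


def actor_critic_step(prefs, values, state, action, reward, next_state,
                      actor_lr=0.1, critic_lr=0.5, gamma=0.9):
    """Update critic and actor from one observed transition; returns the TD error."""
    # Critic: how much better or worse was the outcome than expected?
    td_error = reward + gamma * values[next_state] - values[state]
    probs = softmax(prefs[state])  # policy before the update
    values[state] += critic_lr * td_error
    # Actor: policy-gradient step, scaled by the critic's TD error
    for a in range(len(probs)):
        grad_log_prob = (1.0 if a == action else 0.0) - probs[a]
        prefs[state][a] += actor_lr * td_error * grad_log_prob
    return td_error


# Hypothetical two-state problem with two actions per state
prefs = {0: [0.0, 0.0], 1: [0.0, 0.0]}
values = {0: 0.0, 1: 1.0}
td_error = actor_critic_step(prefs, values, state=0, action=1, reward=1.0, next_state=1)
print(td_error, values[0], prefs[0])
```

Because the outcome was better than the critic expected (a positive TD error), the chosen action becomes more likely and the value estimate of the state goes up.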

Other Effective Algorithms

1. Neural Networks

Neural networks, loosely modeled after the human brain, are made up of interconnected layers of nodes (input, hidden, and output). Their non-linear nature makes them useful for modeling complex relationships, which is why they are used in tasks such as pattern recognition, function approximation, and data processing. Commonly cited benefits include tolerance of noisy or incomplete inputs and accuracy that improves with large, high-quality datasets.
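The sketch below runs a forward pass through a tiny network with one hidden layer. The weights are set by hand (not learned) so that the network computes XOR, a classic relationship that no single linear model can capture. All names and weight values are chosen for this illustration only.

```python
def relu(x):
    """Non-linear activation: negative values become zero."""
    return max(0.0, x)


def dense(inputs, weights, biases, activation):
    """One fully connected layer: weighted sums followed by an activation."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hand-picked weights: 2 inputs -> 2 hidden nodes -> 1 output
HIDDEN_WEIGHTS = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_BIASES = [0.0, -1.0]
OUTPUT_WEIGHTS = [[1.0, -2.0]]
OUTPUT_BIASES = [0.0]


def forward(x):
    """Input layer -> hidden layer (ReLU) -> output layer (no activation)."""
    hidden = dense(x, HIDDEN_WEIGHTS, HIDDEN_BIASES, relu)
    return dense(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES, lambda v: v)[0]


for pair in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(pair, forward(pair))  # outputs 0.0, 1.0, 1.0, 0.0
```

Training a real network means finding such weights automatically from data, usually with backpropagation.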

2. Randomized Algorithms

Randomized Algorithms leverage randomness to find a harmony between efficiency and accuracy in problem-solving. By introducing randomness into the algorithmic procedures, these approaches seek quicker computations and offer approximate solutions. They excel in scenarios where exact solutions are computationally costly or unfeasible, proving valuable in situations where striking a balance between precision and computational resources is advantageous.
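A classic example of trading exactness for speed is the Monte Carlo estimate of pi: throw random points at a unit square and count how many land inside the quarter circle. The function name, seed, and sample count below are arbitrary choices for illustration.

```python
import random


def estimate_pi(samples, seed=0):
    """Approximate pi from the share of random points inside a quarter circle."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    inside = sum(
        1 for _ in range(samples)
        if rng.random() ** 2 + rng.random() ** 2 <= 1.0
    )
    # Quarter-circle area / square area = pi / 4
    return 4.0 * inside / samples


print(estimate_pi(100_000))  # close to 3.14, not exact
```

More samples give a more precise answer at a higher computational cost, which is exactly the trade-off described above.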

3. Genetic Algorithms

Genetic Algorithms are inspired by the principle of natural selection and are used to tackle optimization and search challenges. Emulating the evolutionary process, they generate a population of candidate solutions, select the fittest, and evolve them through crossover (combining two parents) and mutation (small random changes). Through iterative refinement across successive generations, Genetic Algorithms seek optimal or near-optimal solutions and can navigate expansive solution spaces, offering effective answers in domains where conventional optimization methods face difficulties.
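The sketch below applies selection, crossover, and mutation to a toy problem often called OneMax: evolve a string of bits toward all ones. The population size, mutation rate, and other parameters are arbitrary, and the best individual is carried over unchanged each generation (elitism), which is one common design choice rather than a requirement.

```python
import random


def evolve(bits=20, population_size=30, generations=40, mutation_rate=0.02, seed=0):
    """Evolve bit strings toward all ones; returns (best individual, best fitness per generation)."""
    rng = random.Random(seed)
    fitness = sum  # OneMax: fitness is simply the number of 1 bits
    population = [[rng.randint(0, 1) for _ in range(bits)] for _ in range(population_size)]
    best_per_generation = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        best_per_generation.append(fitness(population[0]))
        parents = population[: population_size // 2]  # selection: keep the fitter half
        children = [population[0][:]]                 # elitism: the best survives unchanged
        while len(children) < population_size:
            mother, father = rng.sample(parents, 2)
            cut = rng.randrange(1, bits)              # single-point crossover
            child = mother[:cut] + father[cut:]
            # Mutation: flip each bit with a small probability
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            children.append(child)
        population = children
    return max(population, key=fitness), best_per_generation


best, history = evolve()
print(sum(best), history[0], history[-1])
```

Because of elitism, the best fitness never drops from one generation to the next.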

Conclusion

The rapid evolution of machine learning necessitates that aspiring data scientists and enthusiasts keep up with the latest algorithms. It is critical to recognize that there is no one-size-fits-all algorithm; each has unique strengths and weaknesses. The most important skill is selecting the right tool for the job at hand. Continuous learning and adaptation are critical as the field advances, given the frequent emergence of new algorithms and methodologies.

For those who want to navigate the complexities of machine learning effectively, enrolling in the “Executive Programme in Data Science using Machine Learning & Artificial Intelligence” by CEP, IIT Delhi, is a strategic choice. This course is crafted to offer participants an in-depth grasp of recent advancements, ensuring they acquire the knowledge and skills essential for success in machine learning.

In 2024, these algorithms are more than mere tools; they are the driving force propelling AI into uncharted territories. They symbolize the limitless potential for innovation and problem-solving. As their capabilities unfold, the tech landscape becomes more dynamic and promising, paving the way for transformative advancements in artificial intelligence.