- jaro education

- 4 January 2024

- 9:00 am

Organizations are adopting new technologies that help leaders make informed decisions, and deep learning is one of the most notable. But what is deep learning? It is a type of machine learning that loosely imitates the way humans learn, using artificial neural networks built from many layers. It helps companies build intelligent systems that can solve complex problems, and it has become so popular that many businesses now use it. However, data scientists face difficulties when implementing deep learning with neural networks: they first need to simplify complex algorithms and then justify the results of their models. This is why many data scientists rely on visualization when building neural networks for deep learning in Python.

It is not a simple task to build deep learning models and neural networks without advanced skills in Data Science. If you're interested in building neural networks and deep learning models for businesses with Python, CEP IIT Delhi offers an Executive Program in Applied Data Science using Machine Learning and Artificial Intelligence. In this program, you can learn to apply Data Science principles and Business Analytics using AI techniques and machine learning algorithms, and take a leap in your career as a value-adding professional.

How to Explain Deep Learning?


Deep learning is popular among data scientists, and organizations use it widely thanks to recent developments in data availability, processing capability, and algorithms. At its core, deep learning means training a neural network. But what is a neural network? Simply put, it is a function that fits some data. Its building block is the neuron, which applies a function, such as a linear or sigmoid function, to its inputs. A single neuron has no benefit over a standard machine learning method (a single sigmoid neuron, for example, behaves like logistic regression), so a neural network combines many neurons. Think of neurons as the building blocks of a neural network: you create a network by stacking them.
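As a minimal sketch (the input, weights, and bias below are made-up values for illustration), a single neuron can be written as a function that takes a weighted sum of its inputs, adds a bias, and applies either a linear or a sigmoid function:

```python
import numpy as np

def linear(z):
    """Identity function: the neuron simply outputs its weighted sum."""
    return z

def sigmoid(z):
    """Sigmoid function: squashes the weighted sum into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def neuron(inputs, weights, bias, activation):
    """One neuron: weighted sum of the inputs plus a bias, then an activation."""
    return activation(np.dot(inputs, weights) + bias)

inputs = np.array([1.0, 2.0])     # hypothetical feature values
weights = np.array([0.5, 0.25])   # hypothetical weights
bias = -1.0                       # hypothetical bias

print(neuron(inputs, weights, bias, linear))   # 0.5 + 0.5 - 1.0 = 0.0
print(neuron(inputs, weights, bias, sigmoid))  # sigmoid(0.0) = 0.5
```

With a linear function the neuron is just a weighted sum, which is why one neuron on its own adds little over standard methods; the network's power comes from combining many of them.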

Neural Network and its Layers

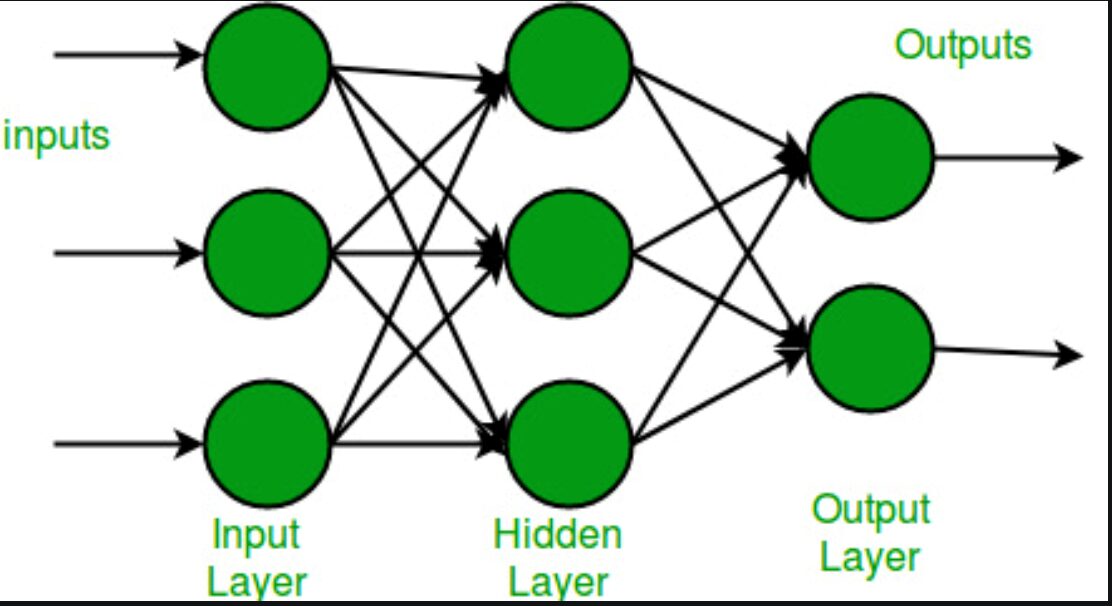

A neural network is a computational model loosely inspired by the human brain. Each layer of a neural network is made up of nodes (neurons), and the connections between nodes represent the flow of information from one layer to the next. Each connection carries a weight. A neuron receives the weighted inputs from the previous layer, processes them, and produces an output that is passed on to the next layer.

A neural network comprises three main types of layers:

Image source: GeeksforGeeks

- Input Layer: The layer that accepts all inputs.

- Hidden Layer: The layer (or layers) between the input and output layers, which examines the incoming data for hidden patterns and extracts features.

- Output Layer: The layer that provides the desired output.
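The three kinds of layers can be sketched as a single forward pass in NumPy. This is an illustrative assumption rather than a prescribed architecture: the hidden layer here has three neurons with a ReLU activation, the output layer uses a sigmoid, and the weights are random rather than trained.

```python
import numpy as np

def relu(z):
    """Rectified linear unit: negative values become zero."""
    return np.maximum(0.0, z)

def sigmoid(z):
    """Squashes values into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, W_hidden, b_hidden, W_out, b_out):
    """Pass one input vector through a hidden layer and an output layer."""
    hidden = relu(W_hidden @ x + b_hidden)   # hidden layer: extracts features
    return sigmoid(W_out @ hidden + b_out)   # output layer: a value in (0, 1)

rng = np.random.default_rng(seed=0)
x = np.array([1.66, 1.56])           # input layer: the raw features
W_hidden = rng.normal(size=(3, 2))   # assumed: 3 hidden neurons, 2 weights each
b_hidden = np.zeros(3)
W_out = rng.normal(size=(1, 3))      # assumed: 1 output neuron fed by 3 hidden neurons
b_out = np.zeros(1)

y = forward(x, W_hidden, b_hidden, W_out, b_out)
print(y.shape, y)
```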

What are the Functions of a Neural Network?

The activation function is one of the key components of a neural network. Each neuron computes the weighted sum of its inputs, adds a bias, and passes the result through its activation function, which determines whether, and how strongly, the neuron is activated. The activation function's objective is to introduce non-linearity into the neuron's output.
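To make this concrete, here is a small sketch with hypothetical inputs, weights, and bias: the neuron computes its weighted sum plus bias, and three common activation functions (sigmoid, ReLU, and tanh) then decide how strongly it fires. Because the weighted sum here is negative, ReLU switches the neuron off completely.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    return np.maximum(0.0, z)

def tanh(z):
    return np.tanh(z)

# Weighted sum plus bias for one neuron (hypothetical numbers)
inputs = np.array([2.0, -1.0])
weights = np.array([0.5, 1.0])
bias = -0.5
z = np.dot(inputs, weights) + bias   # 1.0 - 1.0 - 0.5 = -0.5

for name, f in [("sigmoid", sigmoid), ("relu", relu), ("tanh", tanh)]:
    print(f"{name}: {f(z):.4f}")
```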

Neurons in a neural network work with weights, a bias, and their respective activation functions. During training, the network adjusts the weights and biases of its neurons based on the output error; this process is called back-propagation. Back-propagation computes the gradient of the error with respect to each weight and bias and uses it to update them. Because those gradients must pass back through each neuron's activation function, the activation function has to be differentiable (at least almost everywhere, as with ReLU), and that is what makes back-propagation possible.
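The following sketch shows one back-propagation step for a single sigmoid neuron, reusing an input and weights that appear later in this article. The squared-error loss and the learning rate of 0.1 are assumptions chosen for illustration; the point is that the chain rule carries the error gradient back through the sigmoid's derivative to every weight and to the bias.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sigmoid_derivative(z):
    """Derivative of the sigmoid: lets the gradient flow back through the activation."""
    s = sigmoid(z)
    return s * (1.0 - s)

x = np.array([2.0, 1.5])           # input from the example dataset
target = 0.0
weights = np.array([1.45, -0.66])
bias = 0.0
learning_rate = 0.1                # assumed value

def error_for(weights, bias):
    prediction = sigmoid(np.dot(x, weights) + bias)
    return (prediction - target) ** 2   # squared error (assumed loss)

error_before = error_for(weights, bias)

# Forward pass
z = np.dot(x, weights) + bias
prediction = sigmoid(z)

# Backward pass (chain rule): dE/dw = dE/dpred * dpred/dz * dz/dw
derror_dprediction = 2.0 * (prediction - target)
dprediction_dz = sigmoid_derivative(z)
grad_weights = derror_dprediction * dprediction_dz * x   # dz/dw = x
grad_bias = derror_dprediction * dprediction_dz          # dz/db = 1

# Gradient descent update: move against the gradient
weights = weights - learning_rate * grad_weights
bias = bias - learning_rate * grad_bias

error_after = error_for(weights, bias)
print(f"error before: {error_before:.4f}, after: {error_after:.4f}")
```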

Without an activation function, a neural network is just a linear regression model, no matter how many layers it has. The activation function transforms the input nonlinearly, allowing the network to learn and perform more complex tasks.
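A quick numerical check of this claim, assuming two randomly initialized layers with no activation function: the two layers can always be folded into a single linear layer whose weight matrix is W2 @ W1 and whose bias is W2 @ b1 + b2.

```python
import numpy as np

rng = np.random.default_rng(seed=42)
x = rng.normal(size=2)

# Two layers with no activation function (hypothetical random weights)
W1, b1 = rng.normal(size=(3, 2)), rng.normal(size=3)
W2, b2 = rng.normal(size=(1, 3)), rng.normal(size=1)
two_layer_output = W2 @ (W1 @ x + b1) + b2

# The same mapping expressed as one linear layer
W_combined = W2 @ W1
b_combined = W2 @ b1 + b2
one_layer_output = W_combined @ x + b_combined

print(np.allclose(two_layer_output, one_layer_output))  # True
```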

Why are Deep Learning and Neural Networks Needed?

Digital activity has increased substantially in recent years, creating massive volumes of data. The performance of traditional machine learning methods tends to plateau as more data becomes available, whereas large neural networks keep improving as they are given more data. Data storage has also become increasingly inexpensive, and today's processing power makes it possible to train such huge neural networks. This is why so many companies want to implement deep learning, often with Python.

You can apply deep learning methods in several settings. Standard (fully connected) neural networks are used for online advertising, convolutional neural networks (CNNs) for photo tagging, and recurrent neural networks (RNNs) for machine translation and speech recognition.

If you are an aspiring data scientist who wants to build your own neural network, deep learning with Python is a good place to start.

How to Build Neural Networks from Scratch?

Neural networks are the essential tools of deep learning. Given enough data and computing power, they can tackle most deep learning problems, and with a Python or R package it is fairly simple to design a neural network and train it on any dataset.

The first stage in developing a neural network is producing an output from the input data, which you do by calculating a weighted sum of the input variables. To start, you'll represent the inputs with Python and NumPy.

Use NumPy to Represent the Inputs of the Neural Network

You can use NumPy to represent the network’s input vectors as arrays. However, before using NumPy, it’s a good idea to experiment with vectors in pure Python to better grasp what’s going on.

Say you have an input vector and two weight vectors. The aim is to determine which of the weights is closest to the input in terms of direction and magnitude. And how do you do that in Python?

First, you create three vectors: one for the input and two for the weights. Then, you compute the similarity between input_vector and weights_1 using the dot product. Because all of the vectors are two-dimensional, the steps are as follows:

- Multiply the first element of input_vector by the first element of weights_1.

- Multiply the second element of input_vector by the second element of weights_1.

- Add the results of the two multiplications.

To follow along, you can use IPython or a Jupyter Notebook. Whenever you start a new Python project, it's a good idea to create a new virtual environment. The venv module, included with Python 3.3 and above, is a convenient way to do this:

```shell
$ python -m venv ~/.my-env
$ source ~/.my-env/bin/activate
```

The commands above create a virtual environment and activate it. Now use pip to install the IPython shell. You'll also need NumPy and Matplotlib, so install those as well:

```shell
(my-env) $ python -m pip install ipython numpy matplotlib
(my-env) $ ipython
```

You’re now ready to start coding. The following code computes the dot product of input_vector and weights_1:

```python
input_vector = [1.72, 1.23]
weights_1 = [1.26, 0]
weights_2 = [2.17, 0.32]

# Computing the dot product of input_vector and weights_1
first_indexes_mult = input_vector[0] * weights_1[0]
second_indexes_mult = input_vector[1] * weights_1[1]
dot_product_1 = first_indexes_mult + second_indexes_mult

print(f"The dot product is: {dot_product_1}")
# The dot product is: 2.1672
```
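The session above hard-codes the two multiplications. As a sketch, the same idea generalizes to vectors of any length in pure Python by pairing up elements with zip() and adding the products with sum(); the helper name dot_product is hypothetical:

```python
def dot_product(a, b):
    """Dot product of two equal-length sequences in pure Python (hypothetical helper)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(a, b))

input_vector = [1.72, 1.23]
weights_1 = [1.26, 0]
weights_2 = [2.17, 0.32]
print(f"{dot_product(input_vector, weights_1):.4f}")  # 2.1672
print(f"{dot_product(input_vector, weights_2):.4f}")  # 4.1260
```

Checking the lengths first avoids a silent bug: zip() stops at the shorter sequence, so mismatched vectors would otherwise produce a wrong result without any error.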

The dot product is 2.1672. Now that you know how to compute it by hand, you can use NumPy's np.dot() function instead. Here's how to use np.dot() to compute dot_product_1:

```python
import numpy as np

dot_product_1 = np.dot(input_vector, weights_1)
print(f"The dot product is: {dot_product_1}")
# The dot product is: 2.1672
```

np.dot() performs the same computation as before; you simply pass the two vectors as arguments. Now calculate the dot product of input_vector and weights_2:

```python
dot_product_2 = np.dot(input_vector, weights_2)
# Formatted to 4 decimal places, since the exact value is 1.72 * 2.17 + 1.23 * 0.32 = 4.126
print(f"The dot product is: {dot_product_2:.4f}")
# The dot product is: 4.1260
```

The answer this time is about 4.126. When thinking about the dot product, you can treat the similarity between vector coordinates as an on-off switch: if the product of two coordinates is 0, they are considered unrelated; if it is greater than zero, they are considered similar.

You can think of the dot product as a rough measure of similarity between vectors: every coordinate product that is 0 makes the final dot product lower. Returning to the sample vectors, the dot product of input_vector and weights_2 is about 4.126, which is larger than 2.1672, so input_vector is more similar to weights_2. Your neural network will use this same mechanism.
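To pick the most similar weight vector programmatically, you can stack the candidates into one NumPy array, compute one dot product per row, and take the index of the largest score with np.argmax(). This is a small illustrative sketch; the candidate_weights name is hypothetical. The last line also shows the "unrelated" case: two perpendicular vectors have a dot product of 0.

```python
import numpy as np

input_vector = np.array([1.72, 1.23])
candidate_weights = np.array([
    [1.26, 0.0],    # weights_1
    [2.17, 0.32],   # weights_2
])

# One dot product per row: a rough similarity score for each weight vector
scores = candidate_weights @ input_vector
best = int(np.argmax(scores))
print(scores, f"most similar: weights_{best + 1}")

# Perpendicular vectors have a dot product of 0
print(np.dot([1.0, 0.0], [0.0, 1.0]))  # 0.0
```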

To check whether the neural network works, you'll test it by making predictions that have only two possible outcomes: 0 or 1. This is a classification problem, a type of supervised learning problem in which the dataset contains inputs together with known targets. The dataset's inputs and outputs are as follows:

| Input Vector | Target |
|---|---|
| [1.66, 1.56] | 1 |
| [2, 1.5] | 0 |

The target is the variable you want to predict. In this example, you're working with a dataset of numbers, which is unusual in a real-world production setting. When a deep learning model is needed, the data usually comes in files such as images or text.

Make Your First Prediction

Since this is your first neural network, two layers will suffice. So far, the only two operations you've seen in the network are the dot product and the sum, and both are linear operations.

If you keep using only linear operations, adding more layers has little effect, because the output of each layer is always a linear function of the previous layer's input. In other words, any network made of several linear layers can be replaced by a network with fewer layers that predicts exactly the same results. What you need is an operation that makes the intermediate layers sometimes correlate with an input and sometimes not. Nonlinear functions, i.e., activation functions, produce this behaviour. As mentioned above, there are various types of activation functions. For example, the ReLU (rectified linear unit) function transforms all negative values to zero, so a neuron whose weighted input is negative is effectively "turned off". This is what introduces nonlinearity.
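A minimal sketch of why a nonlinear activation matters, using a hypothetical one-neuron layer: a purely linear layer satisfies f(a + b) = f(a) + f(b), but once ReLU zeroes out a negative weighted sum, that property no longer holds. Losing that additivity is exactly what stops extra layers from collapsing into one.

```python
import numpy as np

def relu(z):
    """ReLU: negative values become 0, positive values pass through unchanged."""
    return np.maximum(0.0, z)

W = np.array([[1.0, -1.0]])   # hypothetical single-neuron layer

def linear_layer(x):
    return W @ x

def relu_layer(x):
    return relu(W @ x)

a = np.array([1.0, 0.0])
b = np.array([0.0, 1.0])

# A linear layer is additive: f(a + b) == f(a) + f(b)
print(np.allclose(linear_layer(a + b), linear_layer(a) + linear_layer(b)))  # True

# With ReLU the neuron is switched off for b (W @ b = -1 -> 0), so additivity breaks
print(relu_layer(a + b), relu_layer(a) + relu_layer(b))  # [0.] [1.]
```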

The network you're creating will use the sigmoid activation function in its last layer, layer_2. The dataset has just two possible outputs, 0 and 1, and the sigmoid function limits its output to the range between 0 and 1. The formula of this function is:

$$S(x) = \frac{1}{1 + e^{-x}}$$

Here, e is the mathematical constant known as Euler's number, and $e^x$ can be calculated using np.exp(x).
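Here is the formula translated directly into NumPy, with a few quick checks of the sigmoid's properties (a sketch; the sample inputs are arbitrary): S(0) is 0.5, every output stays strictly between 0 and 1, the function increases with x, and S(-x) = 1 - S(x).

```python
import numpy as np

def sigmoid(x):
    """S(x) = 1 / (1 + e^(-x)), with e^(-x) computed by np.exp."""
    return 1 / (1 + np.exp(-x))

xs = np.array([-10.0, -1.0, 0.0, 1.0, 10.0])   # arbitrary sample inputs
print(sigmoid(xs))

print(sigmoid(0.0))  # 0.5
```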

A probability function gives the likelihood of each possible outcome of an event. The dataset has just two possible outcomes, 0 and 1, and the Bernoulli distribution also has two possible outcomes. If your problem follows a Bernoulli distribution, the sigmoid function is an excellent choice, which is why you're using it in the last layer of your neural network.

Because the function's output is limited to the range 0 to 1, you'll use it to predict probabilities. If the output is greater than 0.5, the prediction is 1; if it is less than 0.5, the prediction is 0.
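The thresholding rule can be written as a tiny helper. The name to_class is hypothetical, and because the text doesn't say what happens at exactly 0.5, this sketch assumes a tie maps to 0:

```python
def to_class(probability, threshold=0.5):
    """Turn a sigmoid output into a 0/1 prediction (hypothetical helper).

    Outputs above the threshold become 1; everything else, including a
    value exactly equal to the threshold (an assumed tie rule), becomes 0.
    """
    return 1 if probability > threshold else 0

print(to_class(0.8))  # 1
print(to_class(0.3))  # 0
```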

```python
import numpy as np

# Wrapping the vectors in NumPy arrays
input_vector = np.array([1.66, 1.56])
weights_1 = np.array([1.45, -0.66])
bias = np.array([0.0])

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def make_prediction(input_vector, weights, bias):
    layer_1 = np.dot(input_vector, weights) + bias  # weighted sum plus bias
    layer_2 = sigmoid(layer_1)                      # squash into (0, 1)
    return layer_2

prediction = make_prediction(input_vector, weights_1, bias)
print(f"The prediction result is: {prediction}")
# The prediction result is: [0.7985731]
```

Because the predicted value is greater than 0.5, the output is 1, so the network's prediction is correct. Now try a different input vector, np.array([2, 1.5]), for which the correct answer is 0. You only need to change the input_vector variable, since all the other arguments stay the same.

```python
# Changing the value of input_vector
input_vector = np.array([2, 1.5])

prediction = make_prediction(input_vector, weights_1, bias)
print(f"The prediction result is: {prediction}")
# The prediction result is: [0.87101915]
```

This time, the network's prediction is wrong. The target for this input is 0, so the output should be less than 0.5, yet the raw value was about 0.87.
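To check both rows of the dataset at once, a vectorized sketch can score every input with a single matrix product and apply the same 0.5 threshold. It reuses the weights and the zero bias from above; the variable names inputs and targets are assumptions for illustration. It confirms the result: the first row is classified correctly and the second is not.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

# The two rows of the example dataset
inputs = np.array([[1.66, 1.56],
                   [2.0, 1.5]])
targets = np.array([1, 0])

weights_1 = np.array([1.45, -0.66])
bias = 0.0

# One matrix product scores every row at once
probabilities = sigmoid(inputs @ weights_1 + bias)
predicted = (probabilities > 0.5).astype(int)

for p, yhat, y in zip(probabilities, predicted, targets):
    status = "correct" if yhat == y else "wrong"
    print(f"probability={p:.4f} predicted={yhat} target={y} -> {status}")
```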

Final Thoughts

The power of deep learning, and the buzz around building neural networks for all kinds of companies, is growing day by day. These technologies are used to build intelligent systems capable of solving complex problems. If deep learning, AI, and data science are your areas of interest, consider the Executive Program in Applied Data Science using Machine Learning & Artificial Intelligence from CEP, IIT Delhi. The programme is accessible to working professionals, as online classes take place on weekends or after business hours. It promotes peer-to-peer learning and offers mentorship from industry experts, and it can help you advance your career with skills and knowledge in ML and AI.