Markov Chain Analysis: The Backbone of Data Science

- jaro education

- 10 November 2024

- 10:00 am

Let’s understand this: we all use Google Maps to plan our commute. We check current traffic to predict delays and choose the fastest route. Predicting what happens next from the current situation alone is the core idea behind Markov analysis. Based on Andrey Markov’s work, this analysis forecasts the future using current information. Its major uses include predicting market shifts and understanding asset performance. In fact, Markov analysis plays an important role in making strategic financial decisions and optimising investment strategies. So, if you’re curious to understand the real picture behind Markov analysis, this blog is for you. Here, we explain what Markov analysis is, its types, its properties, its advantages and disadvantages, and its applications.

Let’s get started!

What is a Markov Chain?

A Markov Chain is a mathematical model of a sequence of events in which the probability of each event depends only on the state reached in the previous event. Simply put, every transition depends only on the current state and is independent of how the system got there, a principle known as the Markov property or “memoryless” property. In other words, once the present state is known, the past adds no further information about the future. Markov Chains are named after the Russian mathematician Andrey Markov, who introduced the concept in 1906.
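Written in standard probability notation (the symbols are ours, not from the original figure), the memoryless property for a discrete-time chain X_0, X_1, X_2, … says that conditioning on the whole history gives the same answer as conditioning on the present state alone. The final equality assumes the chain is time-homogeneous, i.e. the one-step probability p_ij does not change with n:

```latex
P(X_{n+1} = j \mid X_n = i,\, X_{n-1} = i_{n-1},\, \ldots,\, X_0 = i_0)
  \;=\; P(X_{n+1} = j \mid X_n = i)
  \;=\; p_{ij}
```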

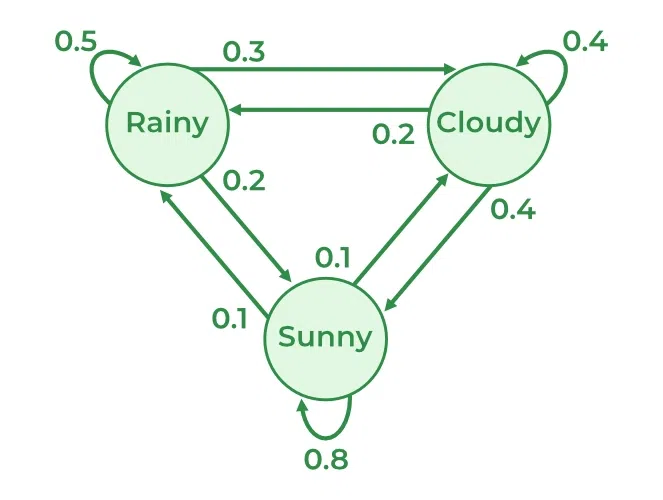

Examples of Markov Chains can be found everywhere: they are useful not only in data analysis but also in economics, machine learning and social platforms, because they simplify the modelling of random processes. In data science, Markov Chains help model web page navigation, predict weather conditions, and support natural language processing (NLP).
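To make the NLP use case concrete, here is a minimal sketch of a word-level Markov Chain text generator. It assumes each word is a state and that the next word depends only on the current word (a bigram model). The corpus and the helper names `build_chain` and `generate` are hypothetical illustrations, not part of any library.

```python
import random
from collections import defaultdict


def build_chain(text: str) -> dict[str, list[str]]:
    """Map each word (state) to the list of words observed right after it."""
    words = text.split()
    chain: dict[str, list[str]] = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        chain[current].append(nxt)
    return dict(chain)


def generate(chain: dict[str, list[str]], start: str, length: int, seed: int = 0) -> list[str]:
    """Walk the chain: each next word is drawn using only the current word."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(length - 1):
        followers = chain.get(output[-1])
        if not followers:  # dead end: this word was never followed by anything
            break
        # Picking uniformly from the follower list weights each word by its observed frequency.
        output.append(rng.choice(followers))
    return output


corpus = "the cat sat on the mat and the cat ran to the door"
chain = build_chain(corpus)
print(" ".join(generate(chain, "the", 8)))
```

Storing repeated followers in a list (rather than normalised probabilities) keeps the sketch short while still sampling each next word in proportion to how often it was seen.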

*geeksforgeeks.org

Types of Markov Chains

Markov Chains can be classified by their properties: whether time is discrete or continuous, whether absorbing states are present, whether a stationary distribution exists, and whether transitions are periodic. Here are the main types:

Discrete-Time Markov Chains (DTMC)

These are Markov chains in which changes between states take place at discrete time steps. The transition probabilities are given by a transition matrix. For example, the state of a discrete-time Markov chain (DTMC) might represent the weather on a specific day (e.g., sunny, cloudy or rainy), with the transitions showing how the weather shifts from one day to the next.
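A minimal sketch of the weather DTMC described above. The transition matrix is hypothetical (the probabilities are invented for illustration, not estimated from data), and `simulate_weather` is a made-up helper name.

```python
import random

STATES = ["sunny", "cloudy", "rainy"]

# Hypothetical transition matrix: row = today's weather, column = tomorrow's.
# Each row sums to 1.
P = [
    [0.7, 0.2, 0.1],  # from sunny
    [0.3, 0.4, 0.3],  # from cloudy
    [0.2, 0.3, 0.5],  # from rainy
]


def simulate_weather(start: str, days: int, seed: int = 42) -> list[str]:
    """Sample a sequence of daily weather states from the DTMC."""
    rng = random.Random(seed)
    i = STATES.index(start)
    path = [start]
    for _ in range(days - 1):
        # Tomorrow is drawn from today's row only (the Markov property).
        i = rng.choices(range(len(STATES)), weights=P[i])[0]
        path.append(STATES[i])
    return path


print(simulate_weather("sunny", 7))
```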

Continuous-Time Markov Chains (CTMC)

In a Continuous-Time Markov Chain (CTMC), transitions between states can occur at any moment rather than at fixed steps. Instead of per-step probabilities, transitions are governed by rates, and the time the chain spends in each state is exponentially distributed. A classic example is the number of customers in a shop at a given time: each arrival moves the chain up by one state and each departure moves it down by one.
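The shop example can be simulated event by event. This sketch assumes Poisson arrivals and a single server (a simple birth-death chain); the rates and the function name `simulate_shop` are hypothetical. At each step it draws an exponential holding time from the total rate, then picks arrival or departure in proportion to their rates.

```python
import random


def simulate_shop(arrival_rate: float, service_rate: float, t_end: float, seed: int = 1):
    """Simulate the number of customers in a shop as a continuous-time Markov chain.

    Returns a list of (event_time, customers_after_event) pairs.
    """
    rng = random.Random(seed)
    t, n = 0.0, 0
    history = [(t, n)]
    while True:
        # Arrivals are always possible; departures only when someone is in the shop.
        departure_rate = service_rate if n > 0 else 0.0
        total_rate = arrival_rate + departure_rate
        # Time spent in the current state is exponential with the total outgoing rate.
        t += rng.expovariate(total_rate)
        if t > t_end:
            break
        # Choose which transition fires, in proportion to its rate.
        if rng.random() < arrival_rate / total_rate:
            n += 1
        else:
            n -= 1
        history.append((t, n))
    return history


events = simulate_shop(arrival_rate=2.0, service_rate=3.0, t_end=10.0)
print(events[:5])
```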

Absorbing Markov Chains

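A state in a Markov Chain is absorbing if there is no way to leave it once it has been entered. In simple words, it has no outgoing transitions to other states, so the chain stays there with probability 1. A Markov Chain is called absorbing if it has at least one absorbing state that can be reached from every other state. For instance, the end of a game, such as the state of having won, can be an absorbing state.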
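A minimal sketch of an absorbing chain, using a hypothetical fair coin-flipping game in which a player holds 0 to 4 coins. Losing everything (state 0) and reaching 4 coins (state 4) are absorbing. `absorption_probabilities` is a made-up helper that simply propagates the state distribution until the mass left in transient states is negligible.

```python
def absorption_probabilities(P, start, steps=1000):
    """Propagate the state distribution long enough that transient mass vanishes."""
    n = len(P)
    dist = [0.0] * n
    dist[start] = 1.0
    for _ in range(steps):
        # One step of the chain: new_dist[j] = sum_i dist[i] * P[i][j]
        dist = [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]
    return dist


# States 0 (ruined) and 4 (won) are absorbing: P[s][s] == 1.
P = [
    [1.0, 0.0, 0.0, 0.0, 0.0],
    [0.5, 0.0, 0.5, 0.0, 0.0],
    [0.0, 0.5, 0.0, 0.5, 0.0],
    [0.0, 0.0, 0.5, 0.0, 0.5],
    [0.0, 0.0, 0.0, 0.0, 1.0],
]

dist = absorption_probabilities(P, start=2)
print(f"P(ruined) = {dist[0]:.4f}, P(won) = {dist[4]:.4f}")
```

For a fair game started in the middle, both absorption probabilities come out at 0.5, and the probability of still being in states 1 to 3 after many steps is essentially zero.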

Reversible Markov Chains

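A Markov Chain is reversible if, once it has settled into its stationary distribution π, running it forwards in time is statistically indistinguishable from running it backwards. Formally, it satisfies detailed balance: π(i) P(i, j) = π(j) P(j, i) for every pair of states i and j, so the probability flow from i to j equals the flow from j to i. Reversible chains are widely used in Monte Carlo simulations, particularly in Markov Chain Monte Carlo (MCMC) methods such as the Metropolis-Hastings algorithm.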
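Detailed balance is easy to check directly. In this sketch, `is_reversible` is a hypothetical helper, and the two example chains are illustrative: a random walk on a line (reversible) and a deterministic cycle that only ever runs one way (not reversible).

```python
def is_reversible(P, pi, tol=1e-12):
    """Check detailed balance: pi[i] * P[i][j] == pi[j] * P[j][i] for all i, j."""
    n = len(P)
    return all(
        abs(pi[i] * P[i][j] - pi[j] * P[j][i]) <= tol
        for i in range(n)
        for j in range(n)
    )


# Random walk on a line with stationary distribution (0.25, 0.5, 0.25).
walk = [[0.5, 0.5, 0.0], [0.25, 0.5, 0.25], [0.0, 0.5, 0.5]]
# Deterministic cycle 0 -> 1 -> 2 -> 0 with a uniform stationary distribution.
cycle = [[0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 0.0, 0.0]]

print(is_reversible(walk, [0.25, 0.5, 0.25]))       # True
print(is_reversible(cycle, [1 / 3, 1 / 3, 1 / 3]))  # False: the cycle runs one way only
```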

Properties of Markov Chains

Here’s an overview of the properties of Markov Chains that make them applicable in various domains:

Memorylessness (Markov Property)

As mentioned above, the future state is predicted from the present state alone. This makes modelling easier, because only the transition probabilities between states are needed rather than the full history of the process.

Transition Matrix

The transition probabilities of a Markov Chain are collected in a transition matrix P, where the entry P(i, j) is the probability of moving from state i to state j in one step. The probabilities in each row sum to 1, because from any state the chain must move to some state (possibly staying in the same one) at the next step.
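A short sketch that checks the row-sum rule and computes multi-step transitions. Raising P to the n-th power gives the probability of going from i to j in exactly n steps. The helper names and the two-state matrix are hypothetical.

```python
def validate_transition_matrix(P, tol=1e-9):
    """Raise ValueError unless P is square, non-negative and each row sums to 1."""
    n = len(P)
    for i, row in enumerate(P):
        if len(row) != n:
            raise ValueError(f"row {i} has {len(row)} entries, expected {n}")
        if any(p < 0 for p in row):
            raise ValueError(f"row {i} has a negative probability")
        if abs(sum(row) - 1.0) > tol:
            raise ValueError(f"row {i} sums to {sum(row)}, not 1")


def mat_mul(A, B):
    """Plain matrix product of two lists of lists."""
    return [
        [sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
        for i in range(len(A))
    ]


def n_step(P, n):
    """Return P^n, whose entry (i, j) is the probability of going from i to j in n steps."""
    validate_transition_matrix(P)
    result = [[1.0 if i == j else 0.0 for j in range(len(P))] for i in range(len(P))]
    for _ in range(n):
        result = mat_mul(result, P)
    return result


P = [[0.9, 0.1], [0.5, 0.5]]  # hypothetical two-state chain
P2 = n_step(P, 2)
print(P2)  # approximately [[0.86, 0.14], [0.7, 0.3]]; rows still sum to 1
```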

Stationary Distribution

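A stationary distribution π is a probability distribution over the states that does not change from one step to the next: π = πP. Many Markov Chains, in particular ergodic ones (finite, irreducible and aperiodic), converge to a unique stationary distribution regardless of the starting state. This long-run equilibrium is helpful for describing the steady state of different problems.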
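One simple way to approximate π is power iteration: start from any distribution and apply P repeatedly until it stops changing. This is a sketch that assumes an ergodic chain (otherwise it may not converge). `stationary_distribution` is a hypothetical helper name.

```python
def stationary_distribution(P, tol=1e-12, max_iter=10_000):
    """Approximate pi with pi = pi P by power iteration from a uniform start."""
    n = len(P)
    pi = [1.0 / n] * n
    for _ in range(max_iter):
        new = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
        if max(abs(a - b) for a, b in zip(new, pi)) < tol:
            return new
        pi = new
    raise RuntimeError("did not converge; is the chain ergodic?")


P = [[0.5, 0.5, 0.0], [0.25, 0.5, 0.25], [0.0, 0.5, 0.5]]
print(stationary_distribution(P))  # approximately [0.25, 0.5, 0.25]
```

For large chains, solving the linear system π(P − I) = 0 with the constraint that π sums to 1 is usually preferred, but power iteration shows the idea of convergence to equilibrium most directly.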

Irreducibility

A Markov Chain is irreducible if every state can be reached from every other state in a finite number of steps with positive probability.
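Irreducibility is a graph property: draw an edge from i to j whenever P(i, j) > 0, then check that every state can reach every other state. A minimal breadth-first search sketch follows; the helper names and example matrices are hypothetical.

```python
from collections import deque


def reachable_from(P, start):
    """Return every state reachable from `start` via positive-probability transitions."""
    seen = {start}
    queue = deque([start])
    while queue:
        i = queue.popleft()
        for j, p in enumerate(P[i]):
            if p > 0 and j not in seen:
                seen.add(j)
                queue.append(j)
    return seen


def is_irreducible(P):
    """True if every state can reach every state (one search from each state)."""
    return all(len(reachable_from(P, i)) == len(P) for i in range(len(P)))


cycle = [[0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 0.0, 0.0]]
with_absorbing_state = [[1.0, 0.0], [0.5, 0.5]]  # state 0 can never be left
print(is_irreducible(cycle))                 # True
print(is_irreducible(with_absorbing_state))  # False
```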

Recurrence and Transience

A state is recurrent if, starting from it, the system returns to it with probability 1. A state is transient if there is a positive probability that the system never returns to it once it has left.
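For a finite chain, recurrence can be decided from reachability alone: a state is recurrent exactly when every state it can reach can also reach it back. This sketch relies on that finite-chain result (it does not hold for infinite chains) and reuses the hypothetical fair-game example, with made-up helper names.

```python
def reachable_from(P, start):
    """States reachable from `start` (including itself) through positive-probability moves."""
    seen, stack = {start}, [start]
    while stack:
        i = stack.pop()
        for j, p in enumerate(P[i]):
            if p > 0 and j not in seen:
                seen.add(j)
                stack.append(j)
    return seen


def classify_states(P):
    """Label each state of a finite chain as recurrent or transient."""
    reach = [reachable_from(P, i) for i in range(len(P))]
    return [
        "recurrent" if all(i in reach[j] for j in reach[i]) else "transient"
        for i in range(len(P))
    ]


# Fair game on 0..4 coins; 0 and 4 are absorbing.
P = [
    [1.0, 0.0, 0.0, 0.0, 0.0],
    [0.5, 0.0, 0.5, 0.0, 0.0],
    [0.0, 0.5, 0.0, 0.5, 0.0],
    [0.0, 0.0, 0.5, 0.0, 0.5],
    [0.0, 0.0, 0.0, 0.0, 1.0],
]
print(classify_states(P))
# ['recurrent', 'transient', 'transient', 'transient', 'recurrent']
```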

Advantages and Disadvantages of Markov Chains

Here are the pros and cons of Markov Chains you should know about.

| Advantages | Disadvantages |
|---|---|
| Simplification of Complex Systems: Markov Chains make complex systems easier to analyse because each transition depends only on the current state. Models that track the full history, such as entire gene sequences in genetics or past stock prices in finance, can quickly become computationally unmanageable; Markov Chains avoid this by conditioning each transition on the current state alone. | Limited Applicability: The Markov property may not fit real systems in which history or external events influence state transitions. |
| Wide Range of Applications: Markov Chains are used in many disciplines, including data science, genetics, finance and the social sciences. In finance, they forecast market shifts, while in biology, they model gene sequences. Their flexibility comes from their ability to model any system in which the future depends on the past only through the present, making them suitable for applications such as NLP, web page ranking and weather forecasting. | Complexity in Large Systems: Although Markov Chains simplify small systems, applying them to large systems with many states can be demanding and expensive; a chain with n states needs an n × n transition matrix. |
| Prediction of Long-Term Behaviour: Markov Chains can describe a system’s long-run behaviour through its steady-state (stationary) probability distribution. For instance, in web mining, they estimate how users move between the pages of a website, while in epidemiology, they can simulate disease transmission by estimating the likelihood of moving to specific states (e.g., recovery or reinfection). Steady-state distributions describe the equilibrium of a system and help predict its long-term behaviour. | Difficulty in Parameter Estimation: Estimating transition probabilities for real-life systems, or transition rates in continuous-time models, can be challenging, especially when data is limited. |
| Efficiency for Computational Algorithms: Markov Chains underpin algorithms such as Markov Chain Monte Carlo (MCMC), which is widely used in machine learning and Bayesian statistics. MCMC constructs a Markov Chain whose stationary distribution is the target probability distribution, so random samples can be drawn from distributions that are hard to sample directly, which helps with difficult optimisation and integration problems. | |
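As an illustration of the MCMC idea, here is a minimal random-walk Metropolis sketch on a small discrete target. The target weights, the starting state and the function name `metropolis` are hypothetical. The chain is built to satisfy detailed balance, so its stationary distribution is the target, and long-run sample frequencies approach the normalised weights.

```python
import random


def metropolis(weights, n_samples, seed=0):
    """Sample states 0..len(weights)-1 with probability proportional to weights.

    Propose a neighbour (+1 or -1) with equal probability and accept it with
    probability min(1, w[new] / w[current]). Proposals that fall off either end
    are rejected (the chain stays put), which keeps the proposal symmetric.
    Assumes all weights are positive.
    """
    rng = random.Random(seed)
    state = 0
    samples = []
    for _ in range(n_samples):
        proposal = state + rng.choice((-1, 1))
        if 0 <= proposal < len(weights):
            if rng.random() < min(1.0, weights[proposal] / weights[state]):
                state = proposal
        samples.append(state)
    return samples


target = [1, 2, 3, 2, 1]  # unnormalised target distribution
samples = metropolis(target, 200_000)
freqs = [samples.count(k) / len(samples) for k in range(len(target))]
print([round(f, 3) for f in freqs])  # should approach [1, 2, 3, 2, 1] / 9
```

Only ratios of weights are needed, which is why MCMC works even when the normalising constant of a distribution is unknown.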

Applications of Markov Chains in Various Fields

A Markov chain is a system of random variables that moves from one state to another according to a set of probabilities. Because the future state depends solely on the current state, Markov chains suit applications in numerous domains. Here, we consider some important applications of Markov chains in various industries, including financial systems, autonomous systems such as self-driving vehicles, and biological systems.

*fastercapital.com

Finance

- Markov chains can support technical analysis of stock prices and investment strategies. By dividing price changes into distinct states (often called price buckets), analysts can model how prices move between states and account for fluctuations in volatility. The resulting chain can drive Monte Carlo simulations, which help estimate the value of certain assets or the best portfolio mix.

- Combining these models with the domain knowledge and judgement of finance specialists can significantly improve prediction quality and make it easier for analysts to adapt to changing market conditions.

- More advanced Markov models also help portfolio managers study interactions between different assets and measure the risks connected with those interactions, supporting decision-making under uncertainty and better investment strategies.
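The price-bucket approach can be sketched in three steps: map returns to states, estimate the transition matrix by counting observed transitions, then simulate paths. The returns below are made up, the ±0.5% cut-offs are a hypothetical choice, and all function names are illustrative.

```python
import random
from collections import Counter, defaultdict


def bucket(daily_return, threshold=0.005):
    """Map a daily return to a discrete state using hypothetical +/-0.5% cut-offs."""
    if daily_return > threshold:
        return "up"
    if daily_return < -threshold:
        return "down"
    return "flat"


def estimate_transitions(states):
    """Maximum-likelihood estimate: count observed transitions and normalise each row."""
    counts = defaultdict(Counter)
    for current, nxt in zip(states, states[1:]):
        counts[current][nxt] += 1
    return {s: {t: c / sum(row.values()) for t, c in row.items()} for s, row in counts.items()}


def simulate(P, start, steps, seed=0):
    """Monte Carlo path of bucket states drawn from the estimated chain.

    Assumes every visited state has at least one observed outgoing transition.
    """
    rng = random.Random(seed)
    path = [start]
    for _ in range(steps):
        row = P[path[-1]]
        path.append(rng.choices(list(row), weights=list(row.values()))[0])
    return path


returns = [0.012, -0.003, -0.008, 0.001, 0.007, 0.009, -0.011, 0.002]  # made-up data
states = [bucket(r) for r in returns]
P = estimate_transitions(states)
print(P)
print(simulate(P, "up", 10))
```

With only eight observations the estimates are very noisy, which illustrates the parameter-estimation difficulty mentioned in the table above; real use would need far more data.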

Autonomous Systems

In autonomous systems, Markov chains are central to reinforcement learning, which models decision-making as a Markov decision process (MDP): a Markov chain extended with actions and rewards.

- MDPs use states, actions and transition probabilities to model an agent’s experience, allowing systems to learn policies that maximise the expected return.

- In robotics, for instance, they are used to evaluate state-action paths and select the most appropriate action based on past experience.
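A minimal value-iteration sketch shows how an MDP turns transition probabilities into a policy. The "line world" robot, its slip probability, the reward and the discount factor are all hypothetical. Value iteration repeatedly backs up the best expected reward plus discounted future value until the values stop changing.

```python
def build_line_world(goal=2, slip=0.2):
    """Hypothetical robot on cells 0..goal; entering `goal` pays reward 1.

    Each move succeeds with probability 1 - slip, otherwise the robot stays put.
    The goal cell is absorbing with no further reward.
    Format: T[state][action] = list of (probability, next_state, reward).
    """
    T = {}
    for s in range(goal + 1):
        if s == goal:
            T[s] = {"stay": [(1.0, s, 0.0)]}
            continue
        T[s] = {}
        for action, step in (("left", -1), ("right", 1)):
            nxt = min(max(s + step, 0), goal)
            T[s][action] = [(1.0 - slip, nxt, 1.0 if nxt == goal else 0.0), (slip, s, 0.0)]
    return T


def q_value(outcomes, V, gamma):
    """Expected immediate reward plus discounted value of the next state."""
    return sum(p * (r + gamma * V[nxt]) for p, nxt, r in outcomes)


def value_iteration(T, gamma=0.9, tol=1e-10):
    V = {s: 0.0 for s in T}
    while True:
        delta = 0.0
        for s, actions in T.items():
            best = max(q_value(o, V, gamma) for o in actions.values())
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:
            break
    # Greedy policy with respect to the converged values.
    policy = {
        s: max(actions, key=lambda a: q_value(actions[a], V, gamma))
        for s, actions in T.items()
    }
    return V, policy


V, policy = value_iteration(build_line_world())
print(policy)  # {0: 'right', 1: 'right', 2: 'stay'}
```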

Bioinformatics

In bioinformatics, Markov chains are used to model biological sequences such as DNA and proteins.

- Each position in a DNA sequence can be treated as a state (A, C, G or T), with transition probabilities describing how likely one nucleotide is to follow another.

- Such models, together with their extension, hidden Markov models (HMMs), help identify genes and other functional regions by comparing how well different models explain a sequence.
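For the bioinformatics use case, here is a sketch of a first-order Markov model over the nucleotides A, C, G and T. The toy training sequence, the pseudocount of 1 and the helper names are hypothetical. The model is trained by counting transitions, then scores new sequences by their log-likelihood; the initial base is ignored for simplicity.

```python
import math

BASES = "ACGT"


def train_dna_model(sequence, pseudocount=1.0):
    """Estimate first-order transition probabilities between nucleotides.

    A pseudocount is added to every pair so unseen transitions keep a small,
    non-zero probability.
    """
    counts = {a: {b: pseudocount for b in BASES} for a in BASES}
    for current, nxt in zip(sequence, sequence[1:]):
        counts[current][nxt] += 1
    return {a: {b: c / sum(row.values()) for b, c in row.items()} for a, row in counts.items()}


def log_likelihood(model, sequence):
    """Sum of log transition probabilities along the sequence."""
    return sum(math.log(model[a][b]) for a, b in zip(sequence, sequence[1:]))


model = train_dna_model("ACGCGCGCGTACGCGA")  # toy training sequence
print(log_likelihood(model, "CGCG"), log_likelihood(model, "ATAT"))
```

Because the toy training data is rich in C-G alternations, "CGCG" scores higher than "ATAT"; comparing such scores across competing models is the basic idea behind sequence classification with Markov models.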

How Jaro Helps to Achieve Your Goals

Jaro Education is one of the leading online higher education and upskilling companies, assisting students in enrolling in IITM Pravartak’s Executive Certification in Advanced Data Science Applications programme. Beyond this, we are also a marketing and technology partner. We have a team of skilled professionals who are always on their toes to provide career counselling and support to everyone who wants to build a successful career in data science. Trust us: we will set you on the right path.

Furthermore, if you enrol in this programme with us, you can get Jaro Expedite – Career Booster benefits:

- Profile Building: From reviewing LinkedIn profiles to building resumes, we give personalised assistance to enhance every individual’s online presence.

- Resume Review: We thoroughly review your resume to make sure that you are ready for interviews.

- Placement Assistance: We provide career counselling and support as required, recommend suitable jobs and help enhance your employment opportunities.

- Career Enhancement Sessions: Sessions from top industry speakers that connect the best talent with organisations.

IITM Pravartak’s Executive Certification in Advanced Data Science Applications

IITM Pravartak Technologies Foundation is a Section 8 company that hosts the technology innovation hub on Sensors, Networking, Actuators and Control Systems (SNACS). It aims to build knowledge in SNACS through substantial, application-oriented research.

IITM Pravartak’s Executive Certification in Advanced Data Science Applications Programme is mainly designed to develop data science proficiency among learners. It focuses on building your skills using core techniques in data science, artificial intelligence, and machine learning. Furthermore, this programme teaches you about the essential methods and concepts that increase your critical thinking in various fields like development, software engineering, etc. IITM Pravartak’s Executive Certification in Advanced Data Science & Applications offers you contextual know-how using case studies from various business verticals.

Here’s a breakdown of the key aspects of this course:

- Institution: IIT Madras Pravartak, in exclusive partnership with Jaro Education

- Course Structure: A 10-month programme consisting of online distance learning and on-campus classes.

- Key Topics: Data Analytics, Machine Learning, Deep Learning, Predictive Modeling

- Certifications: IITM Pravartak certificate on completion

- Career Support: Job placements, internships, and career fairs involving experts in the field.

Final Thoughts

Markov Chain analysis remains one of the core subjects in the growing field of data science.

It opens the door to a wide range of applications, including multi-stage decision-making and prediction in settings where forecasting is difficult. The right education in the right areas of the skill set, such as that provided by IITM Pravartak’s Executive Certification in Advanced Data Science Applications, can go a long way in helping you get the best out of this powerful tool and advance your career.

Frequently Asked Questions

A Markov chain is a mathematical model of transitions between states in which the probability of the next state depends only on the current state, not on past states. It is widely used in data science to predict sequences and behaviours.

The main types include discrete-time, continuous-time, absorbing, ergodic and reversible Markov chains.

In ML, Markov chains are used in NLP and economic modelling, and even to analyse the behaviour of website visitors.

The Markov property implies that the future state depends only on the current state. This makes it easy to model processes in which, once the present state is known, past information adds nothing.

Markov Chains simplify the modelling of complex systems, can be applied in many fields, and are a core component of the MCMC techniques used in data science and machine learning.