Complete Guide to Artificial Neural Networks and Their Applications

Jaro Education | 15 May 2024 | 10:10 am

Definition of Artificial Neural Networks

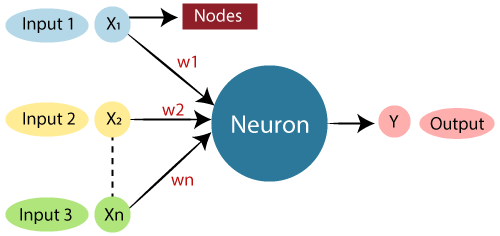

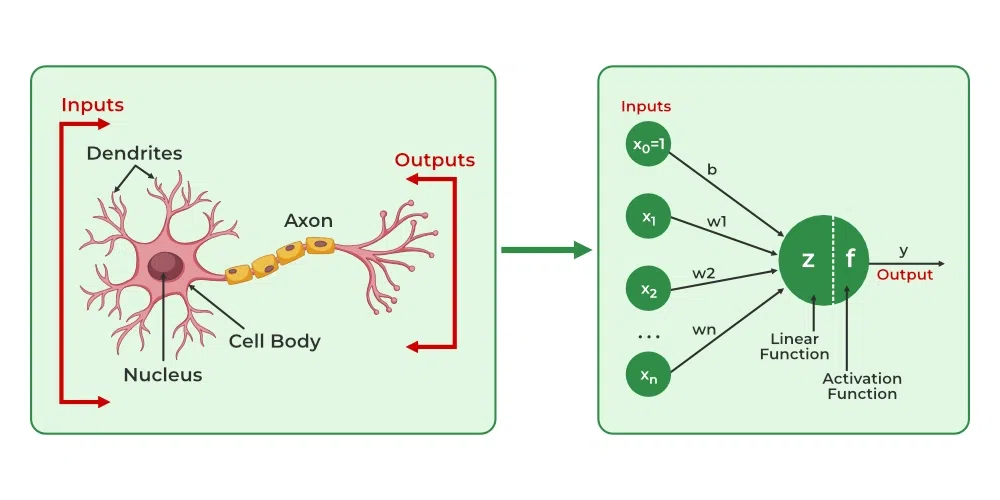

An artificial neural network is a computational model used in artificial intelligence that takes inspiration from biology, loosely mimicking the way networks of neurons in the human brain are structured. It consists of interconnected nodes, or neurons, organized in layers, with weighted connections that play a role similar to the connections between real neurons.

Source: static.javapoint.com

Understanding Artificial Neural Networks

Artificial Neural Networks (ANNs) are a subset of machine learning algorithms inspired by the biological neural networks of the human brain. Composed of interconnected nodes, or “neurons,” ANNs process information through layers of computation, enabling them to recognize patterns, make predictions, and learn from data.

An ANN typically consists of three types of layers:

Input Layer

Receives raw data or features.

Hidden Layers

Transform the data through weighted connections and activation functions.

Output Layer

Produces the final prediction or classification.

The strength of ANNs lies in their ability to learn and adapt based on training data, adjusting the weights of connections to minimize errors and optimize performance.

Key Components of Artificial Neural Networks

Key components of artificial neural networks include:

Neurons (Perceptrons)

The fundamental units of an artificial neural network are neurons. Each neuron receives input signals, computes a weighted sum of them plus a bias, passes that sum through an activation function, and produces an output signal. Neurons are linked to one another by weighted connections that determine how strongly each input signal influences the result.
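As a minimal sketch of a single neuron in plain Python, the computation is a weighted sum of the inputs plus a bias, passed through an activation function. The function names `sigmoid` and `neuron` and the example weights are our own illustrative choices, and the sigmoid is just one possible activation.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: weighted sum of the inputs plus a bias, then an activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias  # pre-activation
    return sigmoid(z)

# Illustrative (made-up) inputs, weights and bias
out = neuron([1.0, 2.0], [0.5, -0.25], bias=0.0)
print(out)  # weighted sum is 0.5 - 0.5 + 0.0 = 0.0, and sigmoid(0.0) = 0.5
```

Changing a weight changes how much the corresponding input pushes the output towards 0 or 1, and the bias shifts the point at which the neuron's output crosses 0.5.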

Layers

Artificial neural networks are organized into layers, including input layers, hidden layers, and output layers. Input layers receive data, hidden layers perform computations, and output layers produce final results or predictions.

Activation Functions

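Neurons within artificial neural networks use activation functions to introduce non-linearities into the model, allowing the network to learn complex patterns and relationships in the data. Without them, a stack of layers would collapse into a single linear transformation, no matter how many layers it had.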
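The text does not name specific activation functions; three widely used ones are the sigmoid, the hyperbolic tangent (tanh), and the rectified linear unit (ReLU). Below is a plain-Python sketch of each. The sigmoid is written in a numerically stable form (our own choice) so that very negative inputs do not overflow `math.exp`.

```python
import math

def sigmoid(z: float) -> float:
    """Maps any real number into (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # safe: z < 0, so e lies in (0, 1)
    return e / (1.0 + e)

def tanh(z: float) -> float:
    """Maps any real number into (-1, 1); zero-centred."""
    return math.tanh(z)

def relu(z: float) -> float:
    """Passes positive values through unchanged and zeroes out negatives."""
    return max(0.0, z)

for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  tanh={tanh(z):.3f}  relu={relu(z):.1f}")
```

ReLU is cheap to compute and is a common default for hidden layers, while sigmoid outputs can be read as probabilities, which is why it often appears in output layers for two-class problems.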

Weights and Biases

Connections between neurons are characterized by weights, which determine the strength of the influence of one neuron on another. Biases are additional parameters that shift the output of neurons, improving the flexibility and performance of the network.

Training Algorithms

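ANNs typically learn from labelled training data. During training, the backpropagation algorithm computes how much each weight and bias contributes to the error between predicted outputs and actual targets, and an optimization algorithm such as gradient descent uses that information to adjust the weights and biases so that the error shrinks.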

Types of Artificial Neural Networks

Artificial Neural Networks (ANNs) encompass a diverse range of architectures and configurations, each tailored for specific tasks and data characteristics. Here are some common types of artificial neural networks, each designed to address different challenges and learning paradigms:

Feedforward Neural Networks (FNNs)

- Feedforward neural networks are the simplest form of neural network: information flows in one direction, from input nodes, through hidden layers, to output nodes.

- They are commonly used for tasks like classification and regression, where the input data is mapped to an output without feedback connections.
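A feedforward pass can be sketched in plain Python as a loop over layers, each computing `activation(W·x + b)`. The two-layer network, its hand-picked weights, and the helper names (`dense`, `feedforward`) are hypothetical, chosen only so the arithmetic is easy to follow; real implementations typically use an array library and learned weights.

```python
def dense(x, W, b, activation):
    """Fully connected layer: activation(W @ x + b), using plain Python lists."""
    return [activation(sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i)
            for row, b_i in zip(W, b)]

def relu(z):
    return max(0.0, z)

def identity(z):
    return z

def feedforward(x, layers):
    """Apply each (W, b, activation) layer in order; information only flows forward."""
    for W, b, activation in layers:
        x = dense(x, W, b, activation)
    return x

# Hypothetical 2 -> 3 -> 1 network with hand-picked weights
layers = [
    ([[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]], [0.0, 0.0, 0.0], relu),  # hidden layer
    ([[1.0, 1.0, 1.0]], [0.5], identity),                            # output layer
]
print(feedforward([2.0, 1.0], layers))  # [4.5]
```

For the input `[2.0, 1.0]`, the hidden layer outputs `[2.0, 1.0, 1.0]` and the output layer adds them to the bias 0.5, giving `[4.5]`. Nothing is ever fed back from a later layer to an earlier one.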

Recurrent Neural Networks (RNNs)

- Recurrent neural networks are designed to process sequences of data by maintaining a state that captures information about previous inputs.

- RNNs have connections that form directed cycles, allowing them to exhibit dynamic temporal behaviour and handle sequential data.

- They are effective for tasks like speech recognition, language modelling, and time series prediction.
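The recurrence can be sketched with a single scalar hidden unit, which is a simplification: practical RNNs use vector states and weight matrices. The update `h_t = tanh(w_x * x_t + w_h * h_prev + b)` is the standard simple ("vanilla") RNN form; the weights in the example are arbitrary.

```python
import math

def rnn_forward(sequence: list[float], w_x: float, w_h: float, b: float,
                h0: float = 0.0) -> list[float]:
    """Single-unit vanilla RNN. Returns the hidden state after every time step."""
    h = h0
    states = []
    for x_t in sequence:
        # The new state mixes the current input with the previous state,
        # so earlier inputs keep influencing later steps.
        h = math.tanh(w_x * x_t + w_h * h + b)
        states.append(h)
    return states

states = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)  # arbitrary weights
print(states)
```

The second and third inputs are zero, yet the hidden state stays non-zero because it still carries information from the first input, fading a little at each step. Setting `w_h=0.0` removes the recurrence and the memory disappears.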

Convolutional Neural Networks (CNNs)

- Convolutional neural networks are specialized for processing grid-like data, such as images or time-series data.

- They use convolutional layers to extract hierarchical representations of spatial features, followed by pooling layers to reduce spatial dimensions.

- CNNs excel in tasks like image classification, object detection, and image segmentation.
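A minimal sketch of the two core operations in plain Python: a "valid" 2D convolution (implemented, as in most deep learning libraries, as cross-correlation, i.e. without flipping the kernel) followed by 2×2 max pooling. The 5×5 image and the vertical-edge kernel are made-up examples; in a real CNN the kernel values are learned.

```python
def conv2d(image: list[list[float]], kernel: list[list[float]]) -> list[list[float]]:
    """'Valid' 2D cross-correlation: slide the kernel over every position where it fits."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def max_pool2d(fmap: list[list[float]], size: int = 2) -> list[list[float]]:
    """Non-overlapping max pooling; rows/cols that don't fill a full window are dropped."""
    return [[max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]

# Made-up 5x5 image with a vertical edge between columns 1 and 2
image = [[0, 0, 1, 1, 1] for _ in range(5)]
kernel = [[-1, 1], [-1, 1]]   # hypothetical vertical-edge detector
fmap = conv2d(image, kernel)  # 4x4 feature map
pooled = max_pool2d(fmap)     # 2x2 after pooling
print(fmap)
print(pooled)
```

The feature map responds (value 2) only at the column where the image changes from 0 to 1, and pooling halves each spatial dimension while keeping that response.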

Long Short-Term Memory (LSTM) Networks

- LSTM networks are a type of recurrent neural network designed to capture long-range dependencies in sequential data.

- They incorporate memory cells that can retain information over extended time intervals, making them suitable for tasks requiring context awareness, such as machine translation and speech synthesis.
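A single-unit (scalar) LSTM step can be sketched as follows, using the common formulation with forget, input, and output gates plus a candidate value. The dictionary layout for the parameters and the gate weights in the example are hand-set, hypothetical values chosen to make the memory behaviour visible, not learned ones.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One time step of a single-unit LSTM cell.
    `p` maps each gate name to a (w_x, w_h, b) triple (a hypothetical layout)."""
    def pre(gate):
        w_x, w_h, b = p[gate]
        return w_x * x + w_h * h_prev + b
    f = sigmoid(pre("forget"))        # how much of the old cell state to keep
    i = sigmoid(pre("input"))         # how much of the candidate to write
    g = math.tanh(pre("candidate"))   # candidate value for the cell state
    o = sigmoid(pre("output"))        # how much of the cell state to expose
    c = f * c_prev + i * g            # memory cell: gated, additive update
    h = o * math.tanh(c)              # new hidden state (the cell's output)
    return h, c

params = {  # hand-set, hypothetical weights: (w_x, w_h, b)
    "forget": (0.0, 0.0, 10.0),       # forget gate ~1: keep the memory
    "input": (10.0, 0.0, -5.0),       # input gate opens only when x is 1
    "candidate": (1.0, 0.0, 0.0),     # candidate = tanh(x)
    "output": (0.0, 0.0, 10.0),       # output gate ~1
}

h, c = 0.0, 0.0
cell_states = []
for x in [1.0] + [0.0] * 50:
    h, c = lstm_step(x, h, c, params)
    cell_states.append(c)
print(round(cell_states[0], 3), round(cell_states[-1], 3))
```

The value written to the cell at the first step (about 0.76) is still almost intact after 50 further steps, because the forget gate stays close to 1 and nothing new is written while the input is zero.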

Generative Adversarial Networks (GANs)

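- Generative adversarial networks consist of two neural networks, the generator and the discriminator, that compete against each other: the generator produces synthetic samples, and the discriminator tries to distinguish them from real data.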

- GANs are used to generate synthetic data samples that closely resemble real data, making them valuable for tasks like image generation, data augmentation, and anomaly detection.
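The competition between the two networks is commonly written as the minimax objective below, where $D(x)$ is the discriminator's estimated probability that $x$ is real, $G(z)$ is the sample the generator produces from random noise $z \sim p_z$, and $p_{\text{data}}$ is the distribution of real data. The discriminator tries to maximize this value, while the generator tries to minimize it.

```latex
\min_{G} \max_{D} \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\!\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_{z}}\!\left[\log\!\left(1 - D(G(z))\right)\right]
```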

Autoencoders

- Autoencoders are unsupervised learning models that learn to encode input data into a compressed representation (encoding) and decode it back to the original form (decoding).

- They are useful for dimensionality reduction, feature extraction, and denoising.
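One common way to formalize the encode-decode idea: an encoder $f_\theta$ maps an input $x$ to a code $z$, usually of lower dimension than $x$, a decoder $g_\phi$ maps the code back, and both are trained together to minimize reconstruction error over $N$ training examples. Squared error is assumed here; other reconstruction losses, such as cross-entropy, are also used.

```latex
z = f_{\theta}(x), \qquad \hat{x} = g_{\phi}(z), \qquad
\mathcal{L}(\theta, \phi) = \frac{1}{N} \sum_{n=1}^{N}
  \left\lVert x_n - g_{\phi}\!\left(f_{\theta}(x_n)\right) \right\rVert_2^2
```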

Deep Belief Networks (DBNs)

- Deep belief networks are composed of multiple layers of latent variables, each layer representing higher-level abstractions of the input data.

- DBNs are used for unsupervised learning, feature learning, and collaborative filtering tasks.

Applications of Neural Networks

Neural networks, a fundamental component of artificial intelligence (AI), find widespread applications across diverse industries, leveraging their ability to learn from data and make complex decisions. Here are some key areas where neural networks are extensively applied:

Image Recognition and Computer Vision

Neural networks, particularly Convolutional Neural Networks (CNNs), excel in tasks related to image recognition and computer vision. They enable accurate object detection, image classification, facial recognition, and scene understanding. Applications range from autonomous vehicles and robotics to medical imaging and quality control in manufacturing.

Natural Language Processing (NLP)

Neural networks, including Recurrent Neural Networks (RNNs) and Transformer-based models, have revolutionized natural language processing. They power language translation, sentiment analysis, chatbots, text summarization, and speech recognition. Leading NLP models like BERT and GPT-3 showcase the prowess of neural networks in understanding and generating human language.

Healthcare and Biomedicine

Neural networks contribute significantly to healthcare, facilitating medical image analysis, disease diagnosis, drug discovery, and personalized treatment recommendations. Applications include MRI image segmentation, predicting patient outcomes, identifying patterns in genomics data, and optimizing drug compounds.

Finance and Business Analytics

In finance, neural networks play a vital role in fraud detection, stock market prediction, credit scoring, and algorithmic trading. They analyze vast datasets, identify patterns, and provide insights for risk assessment, customer segmentation, and optimizing business operations.

Autonomous Systems

Neural networks power autonomous systems such as self-driving cars, drones, and industrial robots. They process sensor data in real time, enabling intelligent decision-making and navigation in dynamic environments, and can improve the safety, efficiency, and reliability of autonomous applications.

Gaming and Entertainment

In the gaming industry, neural networks enhance player experiences through adaptive gameplay, character animation, and natural language interaction. They drive innovations in Virtual Reality (VR), Augmented Reality (AR), and personalized content recommendation systems in entertainment platforms.

Environmental Monitoring and IoT

Neural networks contribute to environmental monitoring by analyzing satellite imagery, weather data, and sensor readings. They support predictive modelling for climate change, air quality monitoring, and optimizing energy consumption in smart cities and IoT ecosystems.

Cybersecurity

Neural networks bolster cybersecurity by detecting anomalies, identifying malicious activities, and enhancing threat intelligence. They analyze network traffic patterns, identify potential threats, and automate responses to cybersecurity incidents.

Neural Networks in Artificial Intelligence

Neural networks are a cornerstone of Artificial Intelligence (AI). Loosely inspired by the human brain, they process information, learn from patterns, and make intelligent decisions. These networks comprise interconnected nodes, or neurons, organized into layers that perform specific tasks. Here’s how neural networks are used in AI:

Learning from Data

Neural networks excel at supervised learning tasks, where they analyze labelled data to recognize patterns and make predictions. During training, backpropagation computes how each internal parameter affects the prediction error, and an optimizer such as gradient descent adjusts the parameters accordingly, improving performance on the training examples.

Deep Learning

Deep learning is the branch of neural network research that uses networks with multiple hidden layers. This architecture allows neural networks to learn complex representations from raw data, enabling tasks like image recognition, natural language processing, and autonomous driving.

Pattern Recognition

Neural networks are adept at pattern recognition tasks, including image and speech recognition, handwriting analysis, and object detection. They learn hierarchical representations of features, enabling accurate classification and identification of complex patterns.

Decision Making

In AI, neural networks aid decision-making processes by processing input data and generating output predictions or classifications. They enable systems to interpret sensory information, adapt to changing environments, and make real-time decisions in various applications.

Natural Language Processing (NLP)

Neural networks power advancements in natural language processing, enabling tasks such as machine translation, sentiment analysis, text generation, and chatbot interactions. Recurrent Neural Networks (RNNs) and transformer-based models like BERT have revolutionized NLP.

Reinforcement Learning

Neural networks are integral to reinforcement learning, where agents learn optimal strategies through trial and error. Deep reinforcement learning algorithms use neural networks to approximate value functions and policy functions; they have reached human-level performance in a number of games and are also applied to control problems in robotics.
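As one concrete example of value-function approximation, deep Q-learning (DQN) trains a network $Q(s, a; \theta)$ to estimate the long-term value of taking action $a$ in state $s$. After observing reward $r$ and next state $s'$, it regresses the network's estimate towards a bootstrapped target $y$. Here $\gamma \in [0, 1)$ is the discount factor and $\theta^{-}$ denotes the parameters of a periodically updated copy of the network (the target network); for terminal states the target is just $r$. Squared error is shown; implementations often substitute the Huber loss.

```latex
y = r + \gamma \max_{a'} Q(s', a'; \theta^{-}), \qquad
\mathcal{L}(\theta) = \left(y - Q(s, a; \theta)\right)^2
```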

Robotics and Autonomous Systems

In robotics, neural networks enable autonomous systems to perceive the environment, plan trajectories, and execute complex tasks. They facilitate sensor fusion, motion control, and object manipulation, enhancing the capabilities of robotic systems.

Predictive Modelling

Neural networks are used extensively for predictive modelling in finance, healthcare, and business analytics. They analyze historical data, identify trends, and make forecasts, aiding in risk assessment, customer segmentation, and predictive maintenance.

How Do Artificial Neural Networks Learn?

Artificial Neural Networks (ANNs) learn through a process of training using labelled data and specific algorithms designed to adjust the network’s parameters, such as weights and biases, to minimize errors in predictions. The learning process can be summarized into the following key steps:

Forward Propagation

- During training, input data is fed into the neural network through the input layer.

- Each neuron in the subsequent layers receives inputs from the previous layer, processes them using weighted connections and an activation function, and produces an output.

- The outputs from the final layer represent the network’s prediction for a given input.
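In matrix form, forward propagation through a network with $L$ layers can be written as below, where $W^{(l)}$ and $\mathbf{b}^{(l)}$ are the weights and biases of layer $l$, $\sigma$ is an activation function applied element-wise, and the prediction is $\hat{\mathbf{y}} = \mathbf{a}^{(L)}$. Using the same $\sigma$ in every layer is a simplifying assumption; output layers often use a different activation, such as the identity for regression or softmax for classification.

```latex
\mathbf{a}^{(0)} = \mathbf{x}, \qquad
\mathbf{z}^{(l)} = W^{(l)} \mathbf{a}^{(l-1)} + \mathbf{b}^{(l)}, \qquad
\mathbf{a}^{(l)} = \sigma\!\left(\mathbf{z}^{(l)}\right), \quad l = 1, \dots, L, \qquad
\hat{\mathbf{y}} = \mathbf{a}^{(L)}
```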

Loss Calculation

- After generating predictions, the network calculates a loss (or error) metric that quantifies the difference between the predicted outputs and the actual targets in the labelled data.
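The text does not specify a loss function; two common choices are mean squared error (MSE) for regression and binary cross-entropy for two-class classification. Below is a plain-Python sketch of both; the clipping constant `eps` is our own safeguard against taking `log(0)`.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Average of squared differences; a common loss for regression."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Average negative log-likelihood for 0/1 labels and predicted probabilities.
    Probabilities are clipped to [eps, 1 - eps] to avoid log(0)."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)

mse = mean_squared_error([3.0, -0.5, 2.0], [2.5, 0.0, 2.0])
bce = binary_cross_entropy([1, 0], [0.9, 0.1])
print(mse, bce)
```

The MSE in the example is 0.5 / 3, about 0.167. For cross-entropy, confident correct predictions give a small loss, while confident wrong ones are penalized heavily.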

Backpropagation

- Backpropagation is a critical step in the learning process of neural networks.

- Backpropagation makes it possible to update the network’s parameters (weights and biases) so as to minimize the loss function; the update itself is carried out by an optimization algorithm such as gradient descent.

- It involves computing the gradient of the loss function with respect to each parameter using the chain rule of calculus.

- The calculated gradients indicate how much each parameter contributed to the overall error, guiding adjustments to reduce errors in future predictions.
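To make the chain rule concrete, here is a hand-derived backward pass for a hypothetical tiny network with one input, one sigmoid hidden unit, and one linear output, trained with squared error. The analytic gradients are checked against central finite differences, a common way to test a backpropagation implementation. All names and values are illustrative.

```python
import math

def forward(params, x):
    """Tiny 1-1-1 network: h = sigmoid(w1*x + b1), y = w2*h + b2."""
    w1, b1, w2, b2 = params
    h = 1.0 / (1.0 + math.exp(-(w1 * x + b1)))
    y = w2 * h + b2
    return h, y

def loss(params, x, t):
    _, y = forward(params, x)
    return 0.5 * (y - t) ** 2

def backprop(params, x, t):
    """Gradients of the loss w.r.t. (w1, b1, w2, b2) via the chain rule."""
    w1, b1, w2, b2 = params
    h, y = forward(params, x)
    dL_dy = y - t                  # derivative of 0.5*(y - t)^2
    dL_dw2 = dL_dy * h             # y = w2*h + b2
    dL_db2 = dL_dy
    dL_dh = dL_dy * w2             # send the error back into the hidden unit
    dL_dz = dL_dh * h * (1.0 - h)  # sigmoid'(z) = h * (1 - h)
    dL_dw1 = dL_dz * x             # z = w1*x + b1
    dL_db1 = dL_dz
    return [dL_dw1, dL_db1, dL_dw2, dL_db2]

def numerical_grad(params, x, t, eps=1e-6):
    """Central finite differences, used only to check the analytic gradients."""
    grads = []
    for k in range(len(params)):
        up = list(params)
        up[k] += eps
        down = list(params)
        down[k] -= eps
        grads.append((loss(up, x, t) - loss(down, x, t)) / (2 * eps))
    return grads

params = [0.5, -0.2, 1.5, 0.1]  # arbitrary starting values
analytic = backprop(params, x=2.0, t=1.0)
numeric = numerical_grad(params, x=2.0, t=1.0)
print(analytic)
print(numeric)
```

Each line of `backprop` multiplies the upstream gradient by one local derivative, which is the chain rule applied from the output back towards the input.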

Gradient Descent Optimization

- With the computed gradients, the network updates its parameters in the opposite direction of the gradient to minimize the loss function.

- Gradient descent algorithms, such as Stochastic Gradient Descent (SGD) or Adam, are commonly used to update weights and biases iteratively.
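Both update rules can be sketched on a one-parameter toy loss, `f(theta) = (theta - 3)**2`, chosen (as an assumption) because its minimum is known to be at 3. Plain gradient descent subtracts the learning rate times the gradient; the Adam sketch follows the standard update with running averages of the gradient and squared gradient plus bias correction, using its commonly cited default hyperparameters. Real SGD computes gradients on random mini-batches of data; this toy loss has no data, so the stochastic part is not shown.

```python
import math

def grad(theta: float) -> float:
    """Gradient of the toy loss f(theta) = (theta - 3)^2."""
    return 2.0 * (theta - 3.0)

def sgd(theta: float, lr: float = 0.1, steps: int = 100) -> float:
    for _ in range(steps):
        theta -= lr * grad(theta)  # step opposite the gradient
    return theta

def adam(theta: float, lr: float = 0.1, steps: int = 1,
         beta1: float = 0.9, beta2: float = 0.999, eps: float = 1e-8) -> float:
    """Adam: step sizes scaled by running averages of the gradient (m) and the
    squared gradient (v), with bias correction for the early steps."""
    m = v = 0.0
    for t in range(1, steps + 1):
        g = grad(theta)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
        theta -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta

print(sgd(0.0))            # very close to 3.0
print(adam(0.0, steps=1))  # first Adam step has size ~lr, so about 0.1
```

With a learning rate of 0.1, each gradient-descent step shrinks the distance to 3 by a factor of 0.8, so `sgd(0.0)` ends within about 1e-9 of the minimum. The single Adam step illustrates a characteristic property: on the first step the update has a magnitude close to the learning rate regardless of how large the gradient is.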

Iteration and Training

- The process of forward propagation, loss calculation, backpropagation, and parameter updates is repeated iteratively over multiple epochs (training cycles).

- During each epoch, the network learns from the training data, gradually improving its ability to make accurate predictions.
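A complete training loop, sketched for the smallest possible network: a single sigmoid neuron learning the logical OR function from four labelled examples. Each epoch runs forward propagation, computes the error, and applies a gradient-descent update per example. With a sigmoid output and cross-entropy loss, the gradient of the loss with respect to the neuron's pre-activation simplifies to `prediction - target`. The dataset, learning rate, and epoch count are illustrative assumptions.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

# Toy labelled dataset (an assumption for illustration): the logical OR function
data = [((0.0, 0.0), 0), ((0.0, 1.0), 1), ((1.0, 0.0), 1), ((1.0, 1.0), 1)]

def predict(w, b, x):
    """Forward pass of a single sigmoid neuron."""
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)

def mean_loss(w, b, eps=1e-12):
    """Mean binary cross-entropy over the dataset (probabilities clipped to avoid log(0))."""
    total = 0.0
    for x, t in data:
        p = min(max(predict(w, b, x), eps), 1.0 - eps)
        total += -math.log(p) if t == 1 else -math.log(1.0 - p)
    return total / len(data)

def train(epochs=2000, lr=0.5):
    w, b = [0.0, 0.0], 0.0
    history = []
    for _ in range(epochs):          # one epoch = one full pass over the data
        for x, t in data:            # stochastic updates, one example at a time
            p = predict(w, b, x)     # forward propagation
            err = p - t              # dLoss/dz for a sigmoid output with cross-entropy
            w[0] -= lr * err * x[0]  # gradient-descent parameter updates
            w[1] -= lr * err * x[1]
            b -= lr * err
        history.append(mean_loss(w, b))
    return w, b, history

w, b, history = train()
print(history[0], history[-1])
print([round(predict(w, b, x), 3) for x, _ in data])
```

By the last epoch, the mean loss in `history` is far below its first-epoch value, and the neuron's outputs fall on the correct side of 0.5 for all four inputs.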

Validation and Testing

- During and after training, the network is evaluated on a separate validation dataset to assess its generalization performance.

- The performance metrics on the validation set help in tuning hyperparameters and preventing overfitting.

- Finally, the trained network is tested on held-out test data that played no part in training or tuning, to estimate how it will perform in real-world scenarios.
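Keeping the three datasets separate is what makes these evaluations meaningful. Below is a minimal sketch of a shuffled train/validation/test split in plain Python; the function name and the 70/15/15 proportions are illustrative assumptions, not values from the text.

```python
import random

def train_val_test_split(examples, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle once with a fixed seed, then carve off disjoint validation and test sets.
    The default fractions are illustrative, not a recommendation."""
    items = list(examples)
    random.Random(seed).shuffle(items)  # fixed seed -> reproducible split
    n_test = round(len(items) * test_frac)
    n_val = round(len(items) * val_frac)
    test_set = items[:n_test]
    val_set = items[n_test:n_test + n_val]
    train_set = items[n_test + n_val:]
    return train_set, val_set, test_set

train_set, val_set, test_set = train_val_test_split(range(100))
print(len(train_set), len(val_set), len(test_set))  # 70 15 15
```

Hyperparameters such as the learning rate, the number of layers, or when to stop training are compared on `val_set`; `test_set` is used once, at the end, so its score remains an honest estimate of performance on unseen data.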

Through this iterative process of forward and backward passes, artificial neural networks learn to recognize patterns, extract features from data, and make predictions. The ability to adjust parameters based on feedback from labelled data is what enables neural networks to generalize and perform well on new, unseen inputs, demonstrating their effectiveness in various machine learning tasks.

Source: www.geeksforgeeks.org

Importance of ANNs in the Field of Artificial Intelligence (AI)

Artificial Neural Networks (ANNs) play a crucial role in the field of Artificial Intelligence (AI) because their brain-inspired, layered design lets them process complex patterns and data. Here are some key reasons why ANNs are important in AI:

Pattern Recognition and Classification

ANNs excel at pattern recognition and classification tasks. They can automatically learn from large datasets and identify intricate patterns in data, making them invaluable for image and speech recognition, natural language processing, and other tasks requiring complex data analysis.

Non-linear Mapping

Traditional linear models have limitations in capturing non-linear relationships in data. ANNs, with their multiple layers and activation functions, can model highly non-linear relationships between inputs and outputs, enabling more accurate and flexible mappings in AI applications.
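A quick numerical check of why the activation functions matter: two stacked linear layers are equivalent to a single linear layer whose weight matrix is the product of the two, so depth alone adds no expressive power. Inserting a ReLU between them breaks this equivalence. The weights are arbitrary, the helper names are our own, and biases are omitted for brevity (with biases, the composition is still a single affine map).

```python
def matmul(A, B):
    """Plain-Python matrix product of A (n x k) and B (k x m)."""
    return [[sum(A[i][p] * B[p][j] for p in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def relu_vec(v):
    return [max(0.0, vi) for vi in v]

W1 = [[1.0, -1.0], [2.0, 0.5]]  # arbitrary example weights (layer 1)
W2 = [[0.5, 1.0]]               # arbitrary example weights (layer 2)
x = [1.0, 3.0]

two_linear = matvec(W2, matvec(W1, x))           # two stacked linear layers
one_linear = matvec(matmul(W2, W1), x)           # one equivalent linear layer
with_relu = matvec(W2, relu_vec(matvec(W1, x)))  # ReLU between the layers
print(two_linear, one_linear, with_relu)
```

`two_linear` and `one_linear` both evaluate to `[2.5]`, while `with_relu` gives `[3.5]` because the ReLU zeroes the negative hidden value, something no single matrix multiplication can reproduce for every input.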

Feature Learning and Representation

ANNs can automatically learn relevant features from raw data, reducing the need for manual feature engineering. This capability is especially useful in image processing, where Convolutional Neural Networks (CNNs) can extract hierarchical features from pixel values.

Adaptability and Generalization

ANNs are adaptive and can generalize from training data to new, unseen data. They can adjust their internal parameters (weights) based on feedback from training data, enabling them to perform well on a wide range of tasks and datasets.

Fault Tolerance and Robustness

ANNs are often tolerant of noisy or incomplete data. They can still provide meaningful outputs when some inputs contain errors or missing values, particularly if the training data included similar imperfections, which makes them robust in many real-world applications.

Parallel Processing and Scalability

ANNs can be implemented efficiently on parallel computing architectures, allowing for faster training and inference on large-scale datasets. This scalability is crucial for AI systems deployed in real-time applications.

Deep Learning Capabilities

Deep learning, a subfield of AI powered by ANNs, has revolutionized many AI applications by leveraging deep neural networks with multiple layers. Deep learning models can automatically learn hierarchical representations of data, leading to state-of-the-art performance in tasks like image recognition, language translation, and game playing.

Enhanced Decision Making

ANNs enable AI systems to make informed decisions based on learned patterns and data. They can process complex inputs and provide actionable insights, supporting decision-making processes in various domains, including finance, healthcare, and autonomous systems.

Conclusion

Artificial neural networks represent a cornerstone of modern AI, enabling machines to perform tasks that were once exclusive to human cognition. With their vast applications and ongoing advancements, neural networks continue to redefine the possibilities of artificial intelligence.

MCA (Specialization: Artificial Intelligence) – Amrita Vishwa Vidyapeetham (Amrita AHEAD) equips students with in-depth knowledge of artificial neural networks and their applications. Join this program through Jaro Education to explore cutting-edge technologies, develop AI solutions, and shape the future of technology. Enroll now to become a leader in the AI-driven future.

Frequently Asked Questions

Artificial neural networks (ANNs) are inspired by the structure and function of the human brain. They consist of interconnected nodes (neurons) organized into layers, similar to the neurons in the brain. ANNs process information through these layers, allowing them to learn from data, recognize patterns, and make decisions.

Neural networks have diverse applications across industries. They are used for image recognition, natural language processing, autonomous vehicles, healthcare diagnostics, financial forecasting, robotics, gaming, and more. Neural networks enable tasks such as image classification, speech recognition, language translation, and predictive modelling.

Neural networks learn from labelled training data using backpropagation together with an optimization algorithm such as gradient descent. During training, the network adjusts its internal parameters (weights and biases) to minimize prediction errors. This iterative process allows neural networks to improve their performance over time and generalize to new data.

There are various types of neural networks designed for specific tasks. Some common types include feedforward neural networks (FNNs), recurrent neural networks (RNNs), convolutional neural networks (CNNs), long short-term memory (LSTM) networks, generative adversarial networks (GANs), and autoencoders. Each type has unique architectures and capabilities.

Neural networks are fundamental to AI because they enable machines to learn from data, recognize patterns, and make decisions without explicit programming. They contribute to advancements in image recognition, natural language understanding, robotics, and autonomous systems, driving innovation across various industries.